- For all $j,k \in \{1,\ldots,m\}$ such that $j\neq k$ we have $\langle u_j, u_k \rangle = 0$.

- For all $k \in \{1,\ldots,m\}$ we have $\langle u_k, u_k \rangle \gt 0$. (This in fact means that all the vectors in this set are nonzero vectors.)

- I post another example of Singular Value Decomposition with a short discussion of the reduced Singular Value Decomposition and the pseudoinverse (Moore-Penrose inverse).

-

Example 2. Here is a calculation of a singular value decomposition of the matrix

\[

A = \left[\!\begin{array}{rrr}

3 & -1 & 1 \\

-1 & 3 & 1 \\

1 & 1 & 1 \\

1 & 1 & 1 \end{array}\right].

\]

- (I) To find the singular values and right singular vectors we calculate the matrix \[ A^\top \!A = \left[\!\begin{array}{rrrr} 3 & -1 & 1 & 1 \\ -1 & 3 & 1 & 1 \\ 1 & 1 & 1 & 1 \end{array}\right] \left[\!\begin{array}{rrr} 3 & -1 & 1 \\ -1 & 3 & 1 \\ 1 & 1 & 1 \\ 1 & 1 & 1 \end{array}\right] = \left[\!\begin{array}{rrr} 12 & -4 & 4 \\ -4 & 12 & 4 \\ 4 & 4 & 4 \end{array}\right] = 4 \left[\!\begin{array}{rrr} 3 & -1 & 1 \\ -1 & 3 & 1 \\ 1 & 1 & 1 \end{array}\right]. \] Observe that adding the first two columns and subtracting twice the third column gives the zero vector. Hence $\lambda_3 = 0$ is an eigenvalue of $A^\top\!A$ and a corresponding eigenvector is $\bigl[ -1 \ -1 \ \ 2 \bigr]^\top$. Since each row of $A^\top\!A$ sums to $12$, $\lambda_2 = 12$ is an eigenvalue of $A^\top\!A$ and a corresponding eigenvector is $\bigl[ 1 \ \ 1 \ \ 1 \bigr]^\top$. Since the vector $\bigl[ 1 \ -1 \ \ 0 \bigr]^\top$ is orthogonal to both earlier found eigenvectors it also must be an eigenvector of $A^\top\!A$. The corresponding eigenvalue is $\lambda_1 = 16$. Thus the singular values of $A$ are $\sigma_1 = 4$ and $\sigma_2 = 2\sqrt{3}$, and the matrices $\Sigma$ and $V$ are as follows \[ \Sigma = \left[\!\begin{array}{rrr} 4 & 0 & 0 \\ 0 & 2\sqrt{3} & 0 \\ 0 & 0 & 0 \\ 0 & 0 & 0 \end{array}\right] \qquad V = \left[\!\begin{array}{rrr} \frac{1}{\sqrt{2}} & \frac{1}{\sqrt{3}} & -\frac{1}{\sqrt{6}} \\ -\frac{1}{\sqrt{2}} & \frac{1}{\sqrt{3}} & -\frac{1}{\sqrt{6}} \\ 0 & \frac{1}{\sqrt{3}} & \frac{2}{\sqrt{6}} \end{array}\right] = \bigl[ \mathbf{v}_1 \ \mathbf{v}_2 \ \mathbf{v}_3 \bigr]. \]

- (II) To find a $4\!\times\!4$ orthogonal matrix $U$ we first normalize vectors \[ A \left[\!\begin{array}{r} 1 \\ -1 \\ 0 \end{array}\right] = \left[\!\begin{array}{rrr} 3 & -1 & 1 \\ -1 & 3 & 1 \\ 1 & 1 & 1 \\ 1 & 1 & 1 \end{array}\right] \left[\!\begin{array}{r} 1 \\ -1 \\ 0 \end{array}\right] = \left[\!\begin{array}{r} 4 \\ -4 \\ 0 \\ 0 \end{array}\right] = 4 \left[\!\begin{array}{r} 1 \\ -1 \\ 0 \\ 0 \end{array}\right], \quad \text{hence} \quad \mathbf{u}_1 = \left[\!\begin{array}{r} \frac{1}{\sqrt{2}} \\ -\frac{1}{\sqrt{2}} \\ 0 \\ 0 \end{array}\right], \] and \[ A \left[\!\begin{array}{r} 1 \\ 1 \\ 1 \end{array}\right] = \left[\!\begin{array}{rrr} 3 & -1 & 1 \\ -1 & 3 & 1 \\ 1 & 1 & 1 \\ 1 & 1 & 1 \end{array}\right] \left[\!\begin{array}{r} 1 \\ 1 \\ 1 \end{array}\right] = \left[\!\begin{array}{r} 3 \\ 3 \\ 3 \\ 3 \end{array}\right] = 3 \left[\!\begin{array}{r} 1 \\ 1 \\ 1 \\ 1 \end{array}\right], \quad \text{hence} \quad \mathbf{u}_2 = \left[\!\begin{array}{r} \frac{1}{2} \\ \frac{1}{2} \\ \frac{1}{2} \\ \frac{1}{2} \end{array}\right]. \] From the general considerations about the singular value decomposition we know that the singular values and left and right singular vectors must satisfy: $A\mathbf{v}_1 = \sigma_1 \mathbf{u}_1$ and $A\mathbf{v}_2 = \sigma_2 \mathbf{u}_2$. Next we verify these equalities: \[ \left[\!\begin{array}{rrr} 3 & -1 & 1 \\ -1 & 3 & 1 \\ 1 & 1 & 1 \\ 1 & 1 & 1 \end{array}\right] \left[\!\begin{array}{r} \frac{1}{\sqrt{2}} \\ -\frac{1}{\sqrt{2}} \\ 0 \end{array}\right] = 4 \left[\!\begin{array}{r} \frac{1}{\sqrt{2}} \\ -\frac{1}{\sqrt{2}} \\ 0 \\ 0 \end{array}\right] \quad \text{and} \quad \left[\!\begin{array}{rrr} 3 & -1 & 1 \\ -1 & 3 & 1 \\ 1 & 1 & 1 \\ 1 & 1 & 1 \end{array}\right] \left[\!\begin{array}{r} \frac{1}{\sqrt{3}} \\ \frac{1}{\sqrt{3}} \\ \frac{1}{\sqrt{3}} \end{array}\right] = 2\sqrt{3} \left[\!\begin{array}{r} \frac{1}{2} \\ \frac{1}{2} \\ \frac{1}{2} \\ \frac{1}{2} \end{array}\right] \] It has been established in class that $\mathbf{u}_1$ and $\mathbf{u}_2$ form an orthonormal basis for $\operatorname{Col}A$.

- (III) To complete the matrix $U$ we need an orthonormal basis for $\mathbb{R}^4$. Since the space $\operatorname{Nul}\bigl(A^\top\bigr)$ is the orthogonal complement of $\operatorname{Col}A$, we can simply find the nullspace of $A^\top$, and then find two orhonormal vectors in $\operatorname{Nul}\bigl(A^\top\bigr).$ Here we go: \[ \textstyle \left[\!\begin{array}{rrrr} 3 & -1 & 1 & 1 \\ -1 & 3 & 1 & 1 \\ 1 & 1 & 1 & 1 \end{array}\right] \sim \left[\!\begin{array}{rrrr} 1 & 1 & 1 & 1 \\ 0 & 4 & 2 & 2 \\ 0 & -4 & -2 & -2 \end{array}\right] \sim \left[\!\begin{array}{rrrr} 1 & 1 & 1 & 1 \\ 0 & 1 & 1/2 & 1/2 \\ 0 & 0 & 0 & 0 \end{array}\right] \sim \left[\!\begin{array}{rrrr} 1 & 0 & 1/2 & 1/2 \\ 0 & 1 & 1/2 & 1/2 \\ 0 & 0 & 0 & 0 \end{array}\right] \] Thus, \[ \operatorname{Nul}\bigl(A^\top\bigr) = \left\{ s \left[\!\begin{array}{r} -1 \\ -1 \\ 0 \\ 2 \end{array}\right] + t \left[\!\begin{array}{r} -1 \\ -1 \\ 2 \\ 0 \end{array}\right] \ : \ s, t \in \mathbb{R} \right\}. \] All the vectors in $\operatorname{Nul}\bigl(A^\top\bigr)$ are orthogonal to $\mathbf{u}_1$ and $\mathbf{u}_2$ (verify this). There are many pairs of orthonormal vectors in $\operatorname{Nul}\bigl(A^\top\bigr).$ One pair that cough my attention is obtained with $s=1/2$, $t=1/2$ and $s=1/2$, $t=-1/2$ and then normalized. That is the pair \[ \mathbf{u}_3 = \left[\!\begin{array}{r} -\frac{1}{2} \\ - \frac{1}{2} \\ \frac{1}{2} \\ \frac{1}{2} \end{array}\right] \quad \text{and} \quad \mathbf{u}_4 = \left[\!\begin{array}{c} 0 \\ 0 \\ -\frac{1}{\sqrt{2}} \\ \frac{1}{\sqrt{2}} \end{array}\right] \] Finally, \[ U = \left[\!\begin{array}{rrrr} \frac{1}{\sqrt{2}} & \frac{1}{2} & -\frac{1}{2} & 0 \\ -\frac{1}{\sqrt{2}} & \frac{1}{2} & -\frac{1}{2} & 0 \\ 0 & \frac{1}{2} & \frac{1}{2} & -\frac{1}{\sqrt{2}} \\ 0 & \frac{1}{2} & \frac{1}{2} & \frac{1}{\sqrt{2}} \end{array}\right]. \]

- Remark To find vectors $\mathbf{u}_3$ and $ \mathbf{u}_4$ it might be slightly more efficient to proceed in the following way. Since we know that $\mathbf{u}_1$ and $ \mathbf{u}_2$ form a basis for $\operatorname{Col} A$ we can find a basis for $(\operatorname{Col} A)^{\perp}$ by solving the system \[ \left[\!\begin{array}{rrrr} 1 & -1 & 0 & 0 \\ 1 & 1 & 1 & 1 \end{array}\right] \left[\!\begin{array}{c} x_1 \\ x_2 \\ x_3 \\ x_4 \end{array}\right] = \left[\!\begin{array}{c} 0 \\ 0 \end{array}\right] \] The row reduction of the matrix \[ \left[\!\begin{array}{rrrr} 1 & -1 & 0 & 0 \\ 1 & 1 & 1 & 1 \end{array}\right] \sim \cdots \sim \left[\!\begin{array}{rrrr} 1 & 0 & 1/2 & 1/2 \\ 0 & 1 & 1/2 & 1/2 \end{array}\right] \] might be simpler than the row reduction that we did in (III).

- To celebrate our work we verify \[ \left[\!\begin{array}{rrr} 3 & -1 & 1 \\ -1 & 3 & 1 \\ 1 & 1 & 1 \\ 1 & 1 & 1 \end{array}\right] = \left[\!\begin{array}{rrrr} \frac{1}{\sqrt{2}} & \frac{1}{2} & -\frac{1}{2} & 0 \\ -\frac{1}{\sqrt{2}} & \frac{1}{2} & -\frac{1}{2} & 0 \\ 0 & \frac{1}{2} & \frac{1}{2} & -\frac{1}{\sqrt{2}} \\ 0 & \frac{1}{2} & \frac{1}{2} & \frac{1}{\sqrt{2}} \end{array}\right] \left[\!\begin{array}{rrr} 4 & 0 & 0 \\ 0 & 2\sqrt{3} & 0 \\ 0 & 0 & 0 \\ 0 & 0 & 0 \end{array}\right] \left[\!\begin{array}{rrr} \frac{1}{\sqrt{2}} & -\frac{1}{\sqrt{2}} & 0 \\ \frac{1}{\sqrt{3}} & \frac{1}{\sqrt{3}} & \frac{1}{\sqrt{3}} \\ -\frac{1}{\sqrt{6}} & -\frac{1}{\sqrt{6}} & \frac{2}{\sqrt{6}} \end{array}\right] . \]

- The reduced Singular Value Decomposition of $A$ is (we drop the vectors that belong to the nullspaces of $A$ and $A^\top$ and the zero rows and columns of $\Sigma$) \[ \left[\!\begin{array}{rrr} 3 & -1 & 1 \\ -1 & 3 & 1 \\ 1 & 1 & 1 \\ 1 & 1 & 1 \end{array}\right] = \left[\!\begin{array}{rr} \frac{1}{\sqrt{2}} & \frac{1}{2} \\ -\frac{1}{\sqrt{2}} & \frac{1}{2} \\ 0 & \frac{1}{2} \\ 0 & \frac{1}{2} \end{array}\right] \left[\!\begin{array}{rr} 4 & 0 \\ 0 & 2\sqrt{3} \end{array}\right] \left[\!\begin{array}{rrr} \frac{1}{\sqrt{2}} & -\frac{1}{\sqrt{2}} & 0 \\ \frac{1}{\sqrt{3}} & \frac{1}{\sqrt{3}} & \frac{1}{\sqrt{3}} \end{array}\right] . \]

-

The pseudoinverse of $A$ is

\[

A^+

=

\left[\!\begin{array}{rr}

\frac{1}{\sqrt{2}} & \frac{1}{\sqrt{3}} \\

-\frac{1}{\sqrt{2}} & \frac{1}{\sqrt{3}} \\

0 & \frac{1}{\sqrt{3}} \end{array}\right]

\left[\!\begin{array}{cc}

\frac{1}{4} & 0 \\

0 & \frac{1}{2\sqrt{3}} \end{array}\right]

\left[\!\begin{array}{rrrr}

\frac{1}{\sqrt{2}} & -\frac{1}{\sqrt{2}} & 0 & 0 \\

\frac{1}{2} & \frac{1}{2} & \frac{1}{2} & \frac{1}{2} \end{array}\right]

= \left[\!\begin{array}{rrrr}

\frac{5}{24} & -\frac{1}{24} & \frac{1}{12} & \frac{1}{12} \\

-\frac{1}{24} & \frac{5}{24} & \frac{1}{12} & \frac{1}{12} \\

\frac{1}{12} & \frac{1}{12} & \frac{1}{12} & \frac{1}{12} \end{array}\right]

\]

The following four properties make the pseudoinverse unique and special:

- The matrix $A^+A$ is symmetric.

- The matrix $AA^+$ is symmetric.

- $AA^+A = A$

- $A^+AA^+ = A^+$

- Suggested problems for Section 7.4: 3, 7, 11, 13, 14, 15, 17, 21

-

Example 1. The following $4\!\times\!5$ matrix is used as an example of Singular Value Decomposition on Wikipedia. \[ M = \left[\!\begin{array}{rrrrr} 1 & 0 & 0 & 0 & 2 \\ 0 & 0 & 3 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 \\ 0 & 2 & 0 & 0 & 0 \end{array}\right]. \] Since this matrix has a lot of zero entries it should not be hard to find its SVD. Remember, SVD is not unique, so if the SVD that we find is different from what is on Wikipedia, it does not mean that it is wrong.

On Tuesday I posted a calculation of a Singular Value Decomposition of the above matrix by calculating a Singular Value Decomposition of its transpose $M^\top.$ For you to see the difference, I will calculate below a Singular Value Decomposition of $M$ directly.

- (I) To find the singular values and right singular vectors of $M^\top$ we calculate the matrix \[ M^\top M = \left[\!\begin{array}{rrrr} 1 & 0 & 0 & 0 \\ 0 & 0 & 0 & 2 \\ 0 & 3 & 0 & 0 \\ 0 & 0 & 0 & 0 \\ 2 & 0 & 0 & 0 \end{array}\!\right] \left[\!\begin{array}{rrrrr} 1 & 0 & 0 & 0 & 2 \\ 0 & 0 & 3 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 \\ 0 & 2 & 0 & 0 & 0 \end{array}\!\right] = \left[\!\begin{array}{rrrrr} 1 & 0 & 0 & 0 & 2 \\ 0 & 4 & 0 & 0 & 0 \\ 0 & 0 & 9 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 \\ 2 & 0 & 0 & 0 & 4 \end{array}\!\right]. \] To calculate the eigenvalues of this matrix we first find its characteristic polynomial: \begin{align*} \left|\begin{array}{ccccc} 1-\lambda & 0 & 0 & 0 & 2 \\ 0 & 4-\lambda & 0 & 0 & 0 \\ 0 & 0 & 9-\lambda & 0 & 0 \\ 0 & 0 & 0 & -\lambda & 0 \\ 2 & 0 & 0 & 0 & 4-\lambda \end{array}\right| &= (4-\lambda)(9-\lambda)(-\lambda)\left|\begin{array}{cc} 1-\lambda & 2 \\ 2 & 4-\lambda \end{array}\right| \\ & = (4-\lambda)(9-\lambda)(-\lambda) \bigl((1-\lambda)(4-\lambda) - 4\bigr) \\ & = (4-\lambda)(9-\lambda)(-\lambda) \bigl(\lambda^2 - 5 \lambda \bigr) \\ & = -(\lambda-9)(\lambda-5)(\lambda-4)(\lambda)^2 \end{align*} Hence, the eigenvalues of $M^\top M$ are \[ \lambda_1 = 9, \quad \lambda_2 = 5, \quad \lambda_3 = 4, \quad \lambda_4 = 0, \quad \lambda_5 = 0. \] Therefore the singular values of $M$ are $3,$ $\sqrt{5},$ and $2.$ The rank of both $M$ is $3.$ The dimension of the null space of $M$ is $2$. The matrix $\Sigma$, in fact for us now it is $\Sigma^\top,$ is \[ \Sigma = \left[\!\begin{array}{ccccc} 3 & 0 & 0 & 0 & 0 \\ 0 & \sqrt{5} & 0 & 0 & 0 \\ 0 & 0 & 2 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 \end{array}\!\right] \]

- (II) Next we need to find an orthogonal $5\times 5$ matrix $V$ such that \[ M^\top M = V D V^\top. \] That is we need to find all the eigenvectors of the matrix $M^\top M$. In this particular case one can almost guess $V$: \[ (M^\top M) V = \left[\!\begin{array}{rrrrr} 1 & 0 & 0 & 0 & 2 \\ 0 & 4 & 0 & 0 & 0 \\ 0 & 0 & 9 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 \\ 2 & 0 & 0 & 0 & 4 \end{array}\!\right] \left[\!\begin{array}{ccccc} 0 & \frac{1}{\sqrt{5}} & 0 & 0 & \frac{2}{\sqrt{5}} \\ 0 & 0 & 1 & 0 & 0 \\ 1 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 1 & 0 \\ 0 & \frac{2}{\sqrt{5}} & 0 & 0 & -\frac{1}{\sqrt{5}} \end{array}\!\right] = \left[\!\begin{array}{ccccc} 0 & \frac{1}{\sqrt{5}} & 0 & 0 & \frac{2}{\sqrt{5}} \\ 0 & 0 & 1 & 0 & 0 \\ 1 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 1 & 0 \\ 0 & \frac{2}{\sqrt{5}} & 0 & 0 & -\frac{1}{\sqrt{5}} \end{array}\!\right] \left[\!\begin{array}{rrrrr} 9 & 0 & 0 & 0 & 0 \\ 0 & 5 & 0 & 0 & 0 \\ 0 & 0 & 4 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 \end{array}\!\right] = V D \] Thus \[ V = \left[\!\begin{array}{ccccc} 0 & \frac{1}{\sqrt{5}} & 0 & 0 & \frac{2}{\sqrt{5}} \\ 0 & 0 & 1 & 0 & 0 \\ 1 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 1 & 0 \\ 0 & \frac{2}{\sqrt{5}} & 0 & 0 & -\frac{1}{\sqrt{5}} \end{array}\!\right]. \]

- (III) To find a $4\!\times\!4$ orthogonal matrix $U$ we notice that the equality $M V = U \Sigma V^\top$ implies \[ M V = U \Sigma. \] Thus, we calculate \begin{align*} MV & =\left[\!\begin{array}{rrrrr} 1 & 0 & 0 & 0 & 2 \\ 0 & 0 & 3 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 \\ 0 & 2 & 0 & 0 & 0 \end{array}\!\right] \left[\!\begin{array}{ccccc} 0 & \frac{1}{\sqrt{5}} & 0 & 0 & \frac{2}{\sqrt{5}} \\ 0 & 0 & 1 & 0 & 0 \\ 1 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 1 & 0 \\ 0 & \frac{2}{\sqrt{5}} & 0 & 0 & -\frac{1}{\sqrt{5}} \end{array}\!\right] \\ & = \left[\!\begin{array}{rrrrr} 0 & \sqrt{5} & 0 & 0 & 0 \\ 3 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 2 & 0 & 0 \end{array}\right] \\ & = \left[\!\begin{array}{cccc} 0 & 1 & 0 & 0 \\ 1 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 \\ 0 & 0 & 1 & 0 \end{array}\right] \left[\!\begin{array}{ccccc} 3 & 0 & 0 & 0 & 0 \\ 0 & \sqrt{5} & 0 & 0 & 0 \\ 0 & 0 & 2 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 \end{array}\!\right] \\ & = U \Sigma \end{align*} Thus, the first three columns of $U$ are \[ \left[\!\begin{array}{ccc} 0 & 1 & 0 \\ 1 & 0 & 0 \\ 0 & 0 & 0 \\ 0 & 0 & 1 \end{array}\right] \] and the fourth vector is the unit vector in $\operatorname{Nul}(M^\top).$ That is \[ U = \left[\!\begin{array}{cccc} 0 & 1 & 0 & 0 \\ 1 & 0 & 0 & 0 \\ 0 & 0 & 0 & 1 \\ 0 & 0 & 1 & 0 \end{array}\right] \]

- To celebrate our work we verify \[ M = \left[\!\begin{array}{rrrrr} 1 & 0 & 0 & 0 & 2 \\ 0 & 0 & 3 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 \\ 0 & 2 & 0 & 0 & 0 \end{array}\right] = \left[\!\begin{array}{rrrr} 0 & 1 & 0 & 0 \\ 1 & 0 & 0 & 0\\ 0 & 0 & 0 & 1 \\ 0 & 0 & 1 & 0 \end{array}\right] \left[\!\begin{array}{ccccc} 3 & 0 & 0 & 0 & 0 \\ 0 & \sqrt{5} & 0 & 0 & 0 \\ 0 & 0 & 2 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 \end{array}\right] \left[\!\begin{array}{ccccc} 0 & 0 & 1 & 0 & 0 \\ \frac{1}{\sqrt{5}} & 0 & 0 & 0 & \frac{2}{\sqrt{5}} \\ 0 & 1 & 0 & 0 & 0 \\ 0 & 0 & 0 & 1 & 0 \\ \frac{2}{\sqrt{5}} & 0 & 0 & 0 & \frac{1}{\sqrt{5}} \end{array}\right] \] Or, equivalently, what is easier to verify $MV = U\Sigma$: \[ \left[\!\begin{array}{rrrrr} 1 & 0 & 0 & 0 & 2 \\ 0 & 0 & 3 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 \\ 0 & 2 & 0 & 0 & 0 \end{array}\right] \left[\!\begin{array}{ccccc} 0 & \frac{1}{\sqrt{5}} & 0 & 0 & \frac{2}{\sqrt{5}} \\ 0 & 0 & 1 & 0 & 0 \\ 1 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 1 & 0 \\ 0 & \frac{2}{\sqrt{5}} & 0 & 0 & -\frac{1}{\sqrt{5}} \end{array}\!\right] = \left[\!\begin{array}{rrrr} 0 & 1 & 0 & 0 \\ 1 & 0 & 0 & 0\\ 0 & 0 & 0 & 1 \\ 0 & 0 & 1 & 0 \end{array}\right] \left[\!\begin{array}{ccccc} 3 & 0 & 0 & 0 & 0 \\ 0 & \sqrt{5} & 0 & 0 & 0 \\ 0 & 0 & 2 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 \end{array}\right]. \]

- Let us state the truncated Singular value decomposition of $M$: \[ M = \left[\!\begin{array}{rrrrr} 1 & 0 & 0 & 0 & 2 \\ 0 & 0 & 3 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 \\ 0 & 2 & 0 & 0 & 0 \end{array}\right] = \left[\!\begin{array}{rrr} 0 & 1 & 0 \\ 1 & 0 & 0 \\ 0 & 0 & 0 \\ 0 & 0 & 1 \end{array}\right] \left[\!\begin{array}{ccc} 3 & 0 & 0 \\ 0 & \sqrt{5} & 0 \\ 0 & 0 & 2 \end{array}\right] \left[\!\begin{array}{ccccc} 0 & 0 & 1 & 0 & 0 \\ \frac{1}{\sqrt{5}} & 0 & 0 & 0 & \frac{2}{\sqrt{5}} \\ 0 & 1 & 0 & 0 & 0 \end{array}\right] \] Or, equivalently, what is easier to verify $MV = U\Sigma$: \[ \left[\!\begin{array}{rrrrr} 1 & 0 & 0 & 0 & 2 \\ 0 & 0 & 3 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 \\ 0 & 2 & 0 & 0 & 0 \end{array}\right] \left[\!\begin{array}{ccc} 0 & \frac{1}{\sqrt{5}} & 0 \\ 0 & 0 & 1 \\ 1 & 0 & 0 \\ 0 & 0 & 0 \\ 0 & \frac{2}{\sqrt{5}} & 0 \end{array}\!\right] = \left[\!\begin{array}{rrr} 0 & 1 & 0 \\ 1 & 0 & 0 \\ 0 & 0 & 0 \\ 0 & 0 & 1 \end{array}\right] \left[\!\begin{array}{ccccc} 3 & 0 & 0 \\ 0 & \sqrt{5} & 0 \\ 0 & 0 & 2 \end{array}\right]. \]

- Suggested problems for Section 7.4: 3, 7, 11, 13, 14, 15, 17, 21

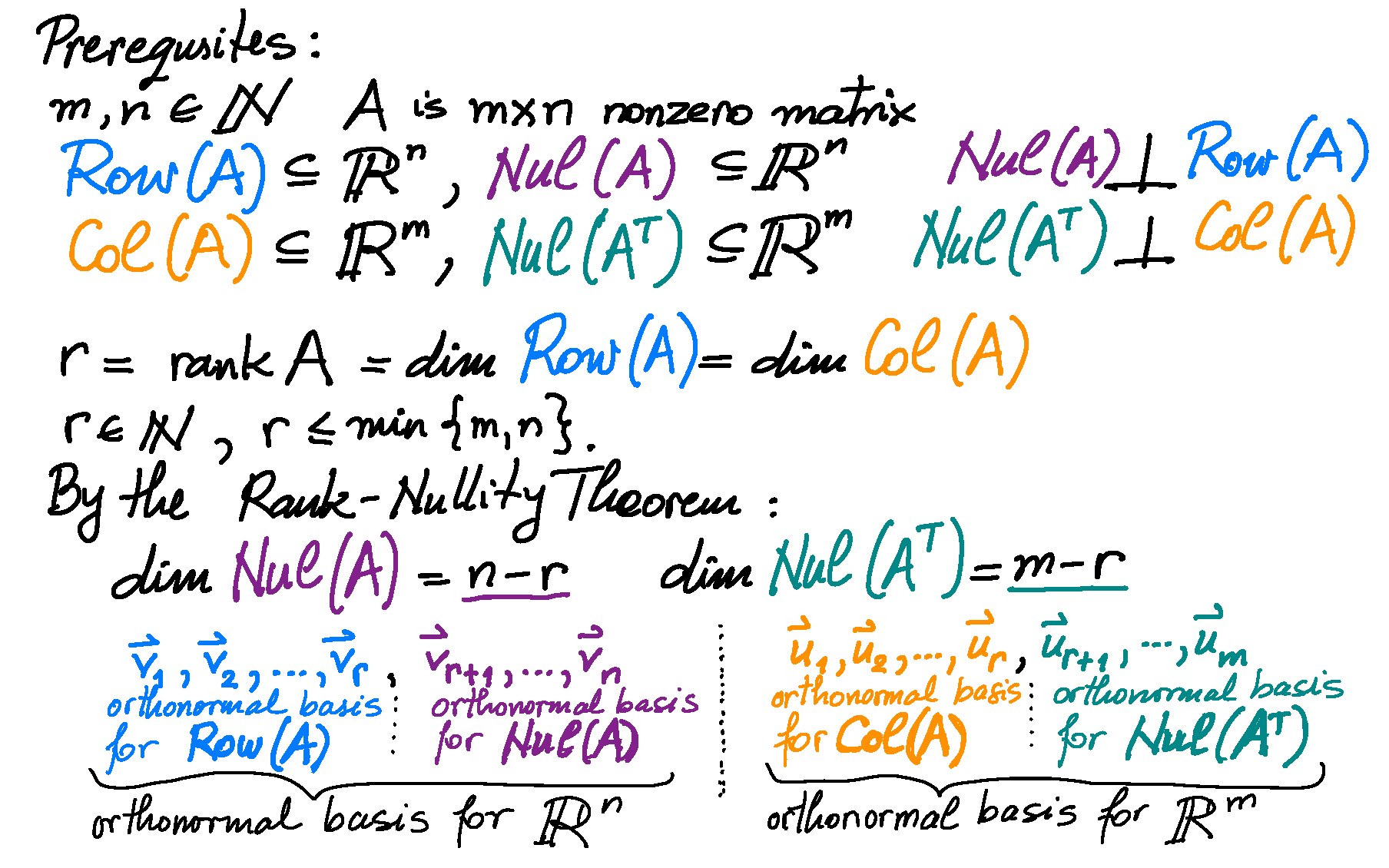

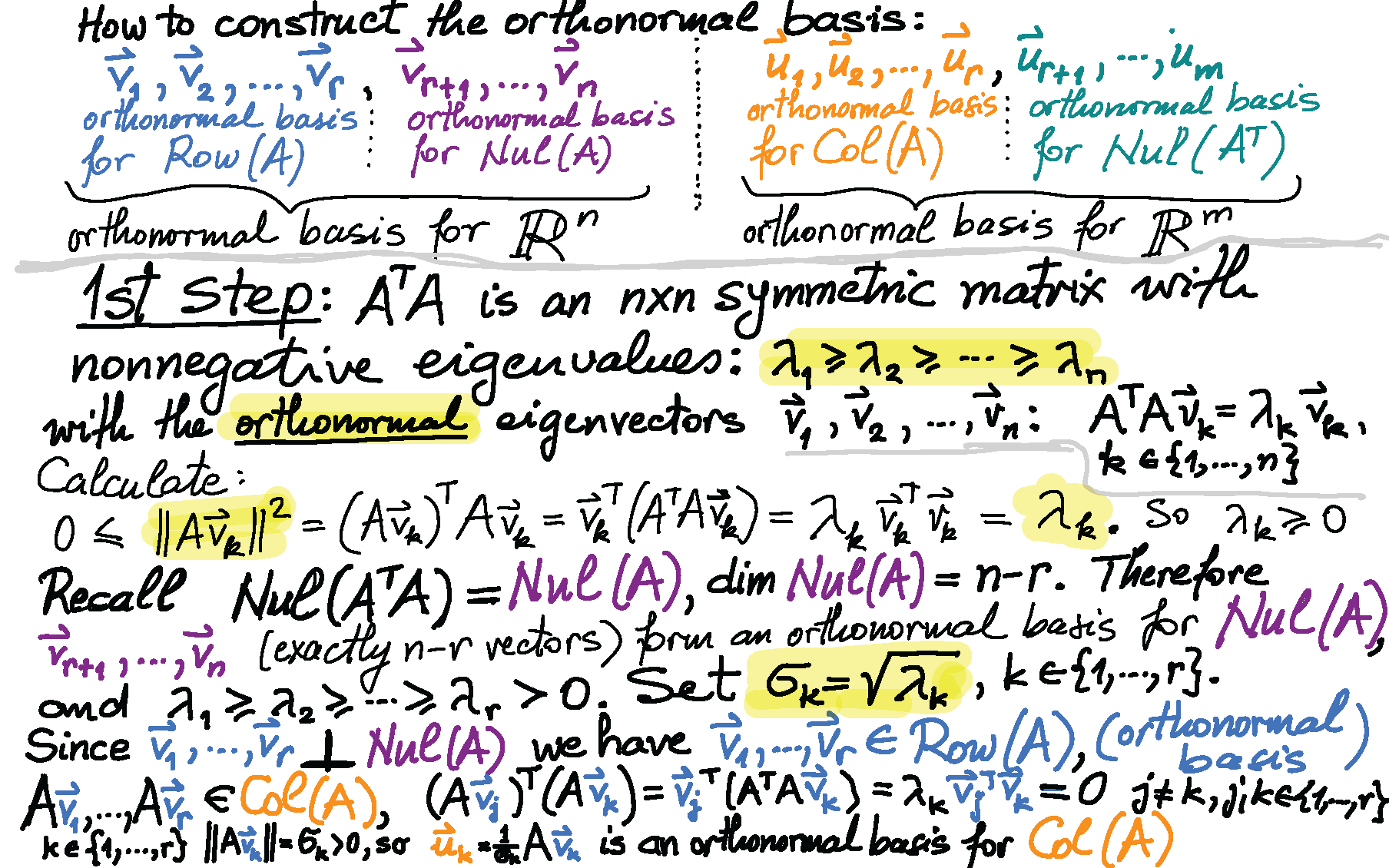

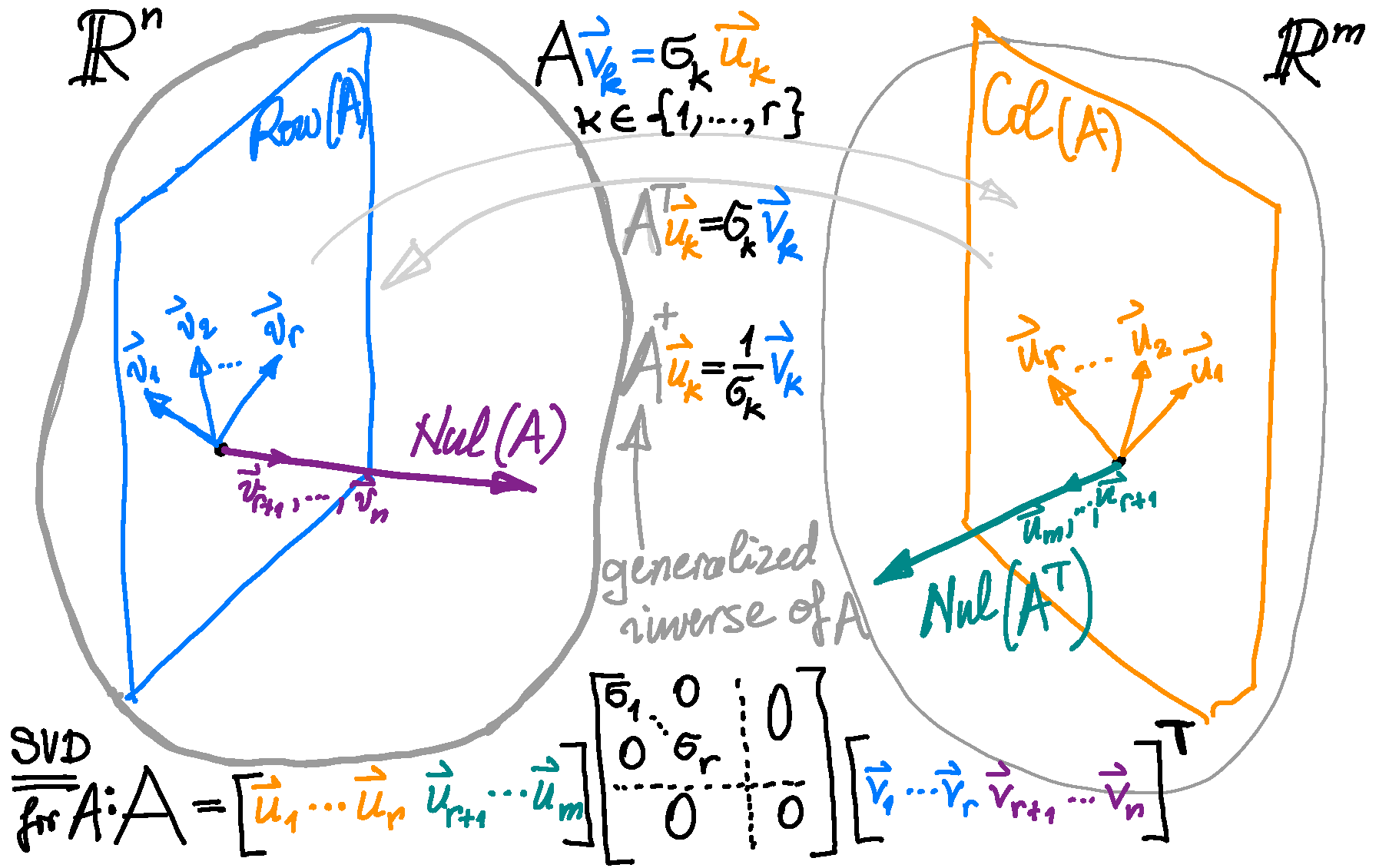

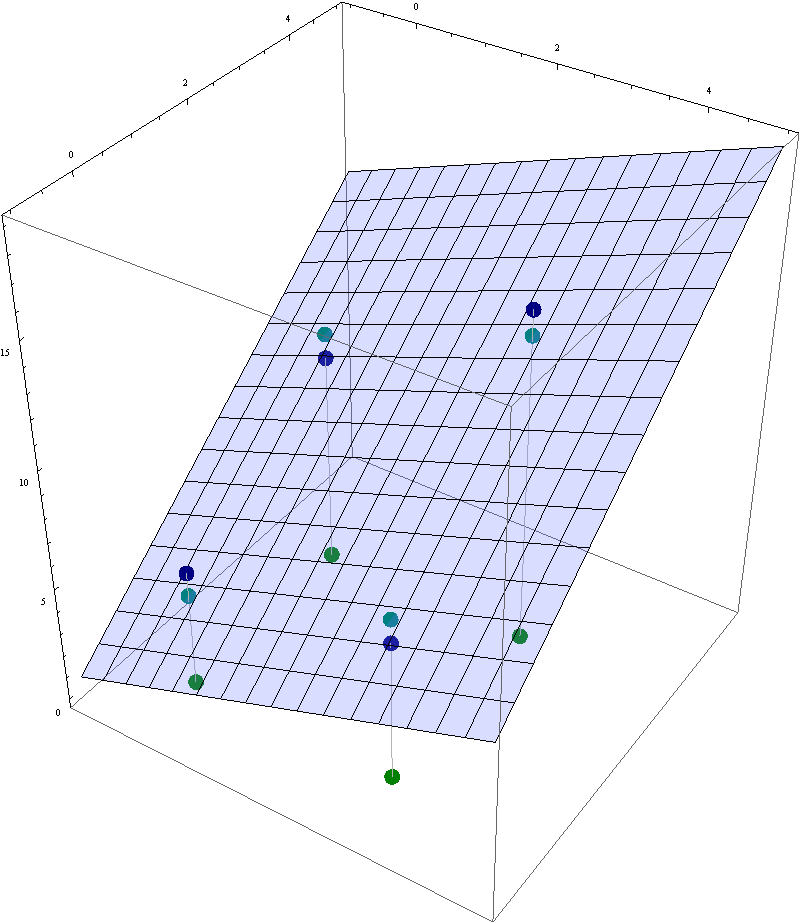

- I post my notes on the Singular Value Decomposition that I wrote in GoodNotes on my iPad.

-

I believe that colors and pictures will help you internalize the process of the construction of the Singular Value Decomposition.

-

Example 1. The following $4\!\times\!5$ matrix is used as an example of Singular Value Decomposition on Wikipedia.

\[

M = \left[\!\begin{array}{rrrrr}

1 & 0 & 0 & 0 & 2 \\

0 & 0 & 3 & 0 & 0 \\

0 & 0 & 0 & 0 & 0 \\

0 & 2 & 0 & 0 & 0

\end{array}\right].

\]

Since this matrix has a lot of zero entries it should not be hard to find its SVD. Remember, SVD is not unique, so if the SVD that we find is different from what is on Wikipedia, it does not mean that it is wrong.

-

In this item I will state an important principle in finding an SVD by hand. Let $A$ be an $m\!\times\!n$ matrix and let

\[

A = U\Sigma V^\top

\]

be an SVD of $A.$ Notice that knowing an SVD of $A$ immediately have found a Singular Value Decomposition of $A^\top$:

\[

A^\top = V \Sigma^\top U^\top.

\]

When you write down the matrix $\Sigma^\top$ you see that the entries on the ``diagonal'' of this matrix are the same as the entries of $\Sigma$. Therefore the singular values of $A$ and $A^\top$ are the same. The only difference is that matrices $U$ and $V$ change positions. Conversely, if we know a Singular Value Decomposition of $A^\top$ we immediately know a Singular Value Decomposition of $A.$

The above observation is particularly important if the positive integer $n$ is "much" larger than the positive integer $m.$ To understand why, think of what is involved in finding an SVD of $A$: We need to find an orthogonal diagonalization of the $n\!\times\!n$ matrix $A^\top A.$ Contrast this with what is involved in finding an SVD of $A^\top$: We need to find an orthogonal diagonalization of the $m\!\times\!m$ matrix $(A^\top)^\top A^\top = AA^\top.$ Since we assume that $m$ is a smaller positive integer, it is easier to find orthogonal diagonalization of $A^\top.$ -

To find a Singular Value Decomposition of $M$ from Wikipedia, we are looking for a $4\!\times\!4$ orthogonal matrix $U$, the $4\!\times\!5$ matrix $\Sigma$ with the singular values of $M$ on the "diagonal", and a $5\!\times\!5$ orthogonal matrix $V$, such that $M = U\Sigma V^\top$.

As explained in the previous item, finding an SVD of $M^\top$ is easier. Thus, we proceed with finding \[ M^\top = V \Sigma^\top U^\top, \] with $U,$ $V$ and $\Sigma$ as above. - (I) To find the singular values and right singular vectors of $M^\top$ we calculate the matrix \[ M M^\top = \left[\!\begin{array}{rrrr} 5 & 0 & 0 & 0 \\ 0 & 9 & 0 & 0 \\ 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 4 \end{array}\right]. \] Clearly the eigenvalues of this matrix in nonincreasing order are $9,$ $5,$ $4$ and $0.$ Thus the singular values of $M$ and $M^\top$ are $3,$ $\sqrt{5},$ and $2.$ The ranks of both $M$ and $M^\top$ are $3.$ The dimension of the nulspace of $M$ is $2$ and the dimension of the nulspace of $M^\top$ is $1$. The matrix $\Sigma$, in fact for us now it is $\Sigma^\top,$ is \[ \Sigma^\top = \left[\!\begin{array}{cccc} 3 & 0 & 0 & 0 \\ 0 & \sqrt{5} & 0 & 0 \\ 0 & 0 & 2 & 0 \\ 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 \end{array}\right] \] The corresponding orthogonal matrix $U$ is \[ U = \left[\!\begin{array}{rrrr} 0 & 1 & 0 & 0 \\ 1 & 0 & 0 & 0\\ 0 & 0 & 0 & 1 \\ 0 & 0 & 1 & 0 \end{array}\right] \]

- (II) To find a $5\!\times\!5$ orthogonal matrix $V$ we notice that the equality $M^\top = V \Sigma^\top U^\top$ implies \[ M^\top U = V \Sigma^\top. \] Thus, we calculate \[ M^\top U =\left[\!\begin{array}{rrrr} 1 & 0 & 0 & 0 \\ 0 & 0 & 0 & 2 \\ 0 & 3 & 0 & 0 \\ 0 & 0 & 0 & 0 \\ 2 & 0 & 0 & 0 \end{array}\right] \left[\!\begin{array}{rrrr} 0 & 1 & 0 & 0 \\ 1 & 0 & 0 & 0 \\ 0 & 0 & 0 & 1 \\ 0 & 0 & 1 & 0 \end{array}\right] = \left[\!\begin{array}{rrrr} 0 & 1 & 0 & 0 \\ 0 & 0 & 2 & 0 \\ 3 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 \\ 0 & 2 & 0 & 0 \end{array}\right] = \left[\!\begin{array}{cccc} 0 & 1/\sqrt{5} & 0 & 0 \\ 0 & 0 & 1 & 0 \\ 1 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 \\ 0 & 2/\sqrt{5} & 0 & 0 \end{array}\right] \left[\!\begin{array}{cccc} 3 & 0 & 0 & 0 \\ 0 & \sqrt{5} & 0 & 0 \\ 0 & 0 & 2 & 0 \\ 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 \end{array}\right] \] Thus, the first three columns of $V$ are \[ \left[\!\begin{array}{ccc} 0 & 1/\sqrt{5} & 0 \\ 0 & 0 & 1 \\ 1 & 0 & 0 \\ 0 & 0 & 0 \\ 0 & 2/\sqrt{5} & 0 \end{array}\right]. \] Notice that to find these three columns we performed a minimal amount of calculation.

- (III) The next step is to find the remaining two columns of $V.$ Since the first three columns of $V$ form an orthonormal basis for $\operatorname{Row} M$, the remaining two columns of $V$ will be two orthonormal vectors in $\operatorname{Nul} M.$ To find these vectors row-reduce $M$: \[ M = \left[\!\begin{array}{rrrrr} 1 & 0 & 0 & 0 & 2 \\ 0 & 0 & 3 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 \\ 0 & 2 & 0 & 0 & 0 \end{array}\right] \quad \sim \quad \left[\!\begin{array}{rrrrr} 1 & 0 & 0 & 0 & 2 \\ 0 & 1 & 0 & 0 & 0 \\ 0 & 0 & 1 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 \\ \end{array}\right]. \] Thus, the null-space of $M$ is spanned by the orthogonal vectors \[ \left[\!\begin{array}{r} -2 \\ 0 \\ 0 \\ 0 \\ 1 \end{array}\right] \qquad \text{and} \qquad \left[\!\begin{array}{r} 0 \\ 0 \\ 0 \\ 1 \\ 0 \end{array}\right]. \] Finally we have the complete $5\!\times\!5$ matrix $V$ \[ V = \left[\!\begin{array}{ccc} 0 & 1/\sqrt{5} & 0 & -2/\sqrt{5} & 0 \\ 0 & 0 & 1 & 0 & 0 \\ 1 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 1 \\ 0 & 2/\sqrt{5} & 0 & 1/\sqrt{5} & 0 \end{array}\right]. \]

-

In this item I will state an important principle in finding an SVD by hand. Let $A$ be an $m\!\times\!n$ matrix and let

\[

A = U\Sigma V^\top

\]

be an SVD of $A.$ Notice that knowing an SVD of $A$ immediately have found a Singular Value Decomposition of $A^\top$:

\[

A^\top = V \Sigma^\top U^\top.

\]

When you write down the matrix $\Sigma^\top$ you see that the entries on the ``diagonal'' of this matrix are the same as the entries of $\Sigma$. Therefore the singular values of $A$ and $A^\top$ are the same. The only difference is that matrices $U$ and $V$ change positions. Conversely, if we know a Singular Value Decomposition of $A^\top$ we immediately know a Singular Value Decomposition of $A.$

- To celebrate our work we verify \[ M = \left[\!\begin{array}{rrrrr} 1 & 0 & 0 & 0 & 2 \\ 0 & 0 & 3 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 \\ 0 & 2 & 0 & 0 & 0 \end{array}\right] = \left[\!\begin{array}{rrrr} 0 & 1 & 0 & 0 \\ 1 & 0 & 0 & 0\\ 0 & 0 & 0 & 1 \\ 0 & 0 & 1 & 0 \end{array}\right] \left[\!\begin{array}{ccccc} 3 & 0 & 0 & 0 & 0 \\ 0 & \sqrt{5} & 0 & 0 & 0 \\ 0 & 0 & 2 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 \end{array}\right] \left[\!\begin{array}{ccc} 0 & 0 & 1 & 0 & 0 \\ 1/\sqrt{5} & 0 & 0 & 0 & 2/\sqrt{5} \\ 0 & 1 & 0 & 0 & 0 \\ -2/\sqrt{5} & 0 & 0 & 0 & 1/\sqrt{5} \\ 0 & 0 & 0 & 1 & 0 \end{array}\right] \] Or, equivalently, what is easier $MV = U\Sigma$: \[ \left[\!\begin{array}{rrrrr} 1 & 0 & 0 & 0 & 2 \\ 0 & 0 & 3 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 \\ 0 & 2 & 0 & 0 & 0 \end{array}\right] \left[\!\begin{array}{ccc} 0 & 1/\sqrt{5} & 0 & -2/\sqrt{5} & 0 \\ 0 & 0 & 1 & 0 & 0 \\ 1 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 1 \\ 0 & 2/\sqrt{5} & 0 & 1/\sqrt{5} & 0 \end{array}\right] = \left[\!\begin{array}{rrrr} 0 & 1 & 0 & 0 \\ 1 & 0 & 0 & 0\\ 0 & 0 & 0 & 1 \\ 0 & 0 & 1 & 0 \end{array}\right] \left[\!\begin{array}{ccccc} 3 & 0 & 0 & 0 & 0 \\ 0 & \sqrt{5} & 0 & 0 & 0 \\ 0 & 0 & 2 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 \end{array}\right]. \]

- I continue with examples started on Thursday.

-

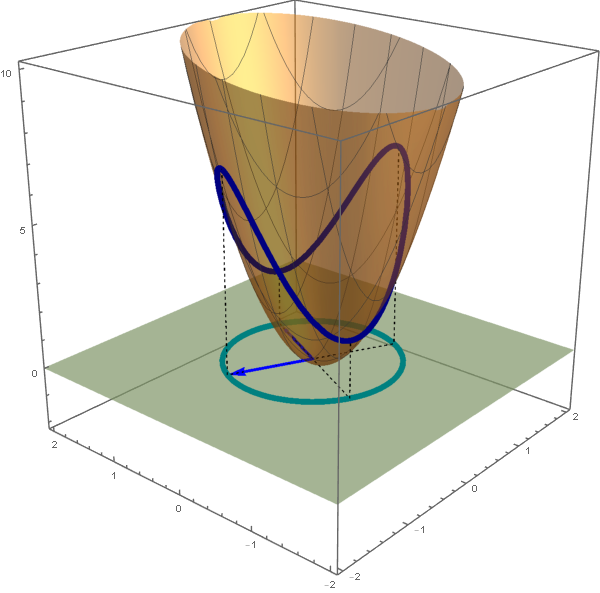

Example 5.

In this item we consider the quadratic form

\begin{align*}

Q(x_1, x_2, x_3) & = 2 x_1^2+2 x_1 x_2 +2 x_1 x_3 +2 x_2^2 + 2 x_2 x_3 + 2 x_3^2 \\

& \qquad \qquad \text{where} \quad x_1, x_2, x_3 \in \mathbb{R}.

\end{align*}

- We have \begin{align*} Q(x_1, x_2, x_3) & = \bigl[ x_1 \ \ x_2 \ \ x_3 \bigr] \left[\! \begin{array}{ccc} 2 & 1 & 1 \\ 1 & 2 & 1 \\ 1 & 1 & 2 \end{array} \!\right] \left[\! \begin{array}{c} x_1 \\ x_2 \\ x_3 \end{array} \!\right] \\ & = 2 x_1^2+2 x_1 x_2 +2 x_1 x_3 +2 x_2^2 + 2 x_2 x_3 + 2 x_3^2 \\ & \qquad \text{where} \quad x_1, x_2, x_3 \in \mathbb{R}. \end{align*}

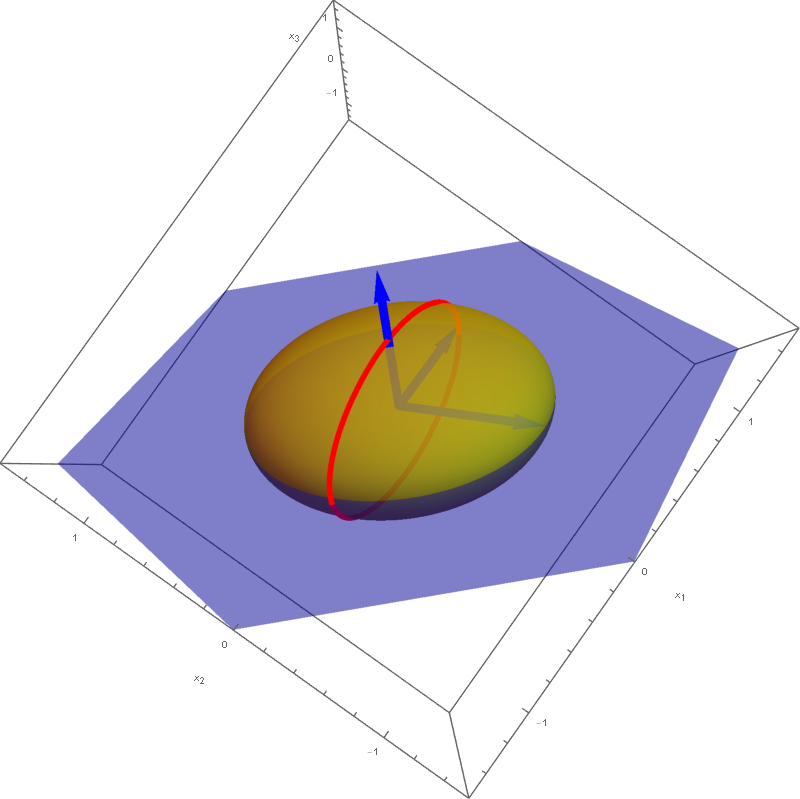

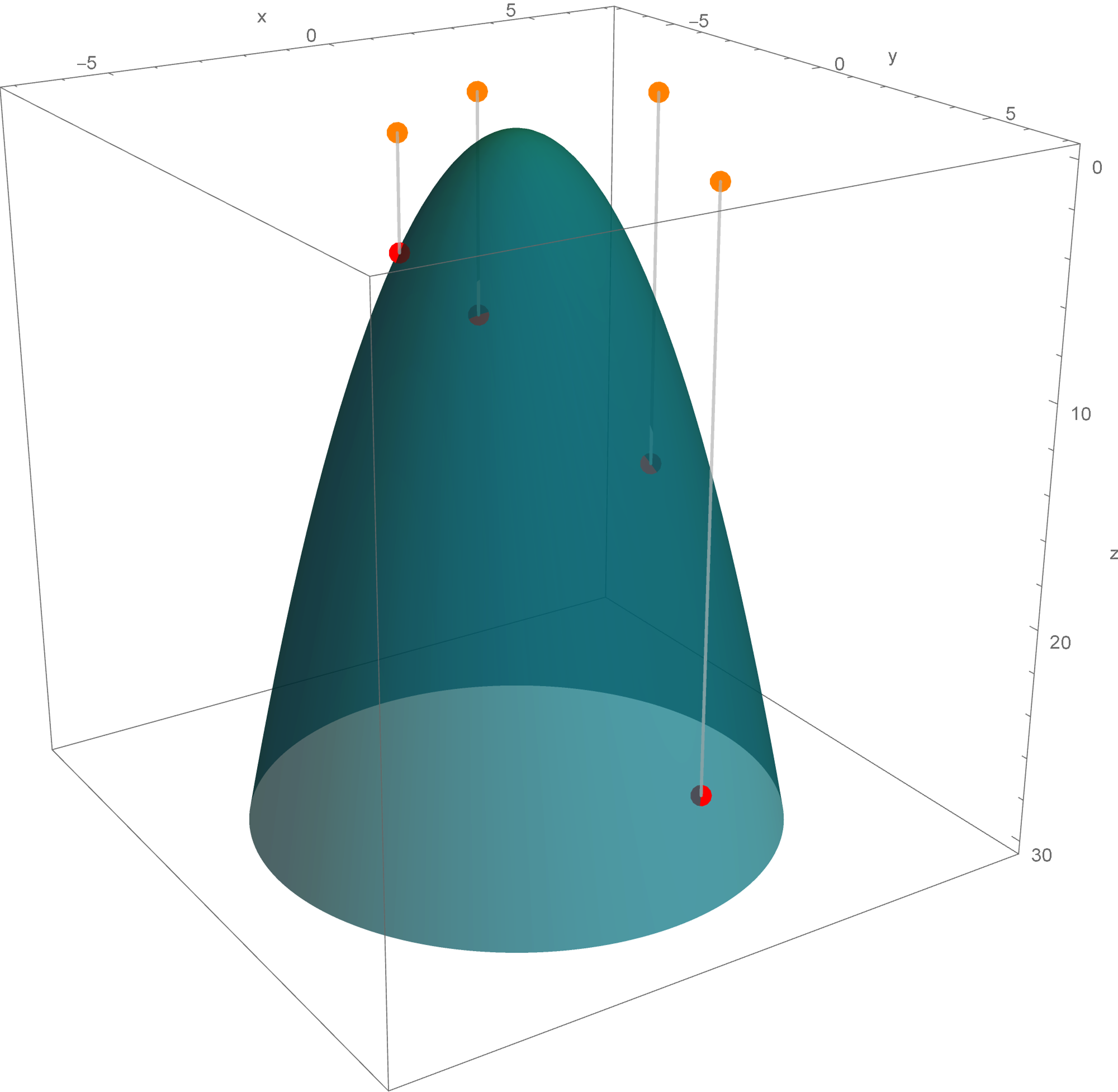

- Clearly the quadratic form $Q$ is not a zero form. To classify $Q$ as positive semidefinite, negative semidefinite, indefinite we orthogonally diagonalize the matrix of this quadratic form: \[ \left[\! \begin{array}{ccc} 2 & 1 & 1 \\ 1 & 2 & 1 \\ 1 & 1 & 2 \end{array} \!\right] = \left[\!\begin{array}{ccc} \frac{1}{\sqrt{2}} & \frac{1}{\sqrt{6}} & \frac{1}{\sqrt{3}} \\ 0 & -\frac{2}{\sqrt{6}} & \frac{1}{\sqrt{3}} \\ -\frac{1}{\sqrt{2}} & \frac{1}{\sqrt{6}} & \frac{1}{\sqrt{3}} \\ \end{array} \!\right] \left[\! \begin{array}{ccc} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 4 \end{array} \!\right] \left[\!\begin{array}{ccc} \frac{1}{\sqrt{2}} & \frac{1}{\sqrt{6}} & \frac{1}{\sqrt{3}} \\ 0 & -\frac{2}{\sqrt{6}} & \frac{1}{\sqrt{3}} \\ -\frac{1}{\sqrt{2}} & \frac{1}{\sqrt{6}} & \frac{1}{\sqrt{3}} \\ \end{array} \!\right]^\top \] Let us introduce two bases \[ \mathcal{B} = \left\{ \left[\! \begin{array}{c} \frac{1}{\sqrt{2}} \\ 0 \\ -\frac{1}{\sqrt{2}} \end{array} \!\right], \left[\! \begin{array}{c} \frac{1}{\sqrt{6}} \\ -\frac{2}{\sqrt{6}} \\ \frac{1}{\sqrt{6}} \end{array} \!\right], \left[\! \begin{array}{c} \frac{1}{\sqrt{3}} \\ \frac{1}{\sqrt{3}} \\ \frac{1}{\sqrt{3}} \end{array} \!\right] \right\} \qquad \text{and} \qquad \mathcal{E} = \left\{ \left[\! \begin{array}{c} 1 \\ 0 \\ 0 \end{array} \!\right], \left[\! \begin{array}{c} 0 \\ 1 \\ 0 \end{array} \!\right], \left[\! \begin{array}{c} 0 \\ 0 \\ 1 \end{array} \!\right] \right\}. \] The above orthogonal diagonalization suggests a very useful change of coordinates: \[ \mathbf{y} = \left[\!\begin{array}{ccc} \frac{1}{\sqrt{2}} & \frac{1}{\sqrt{6}} & \frac{1}{\sqrt{3}} \\ 0 & -\frac{2}{\sqrt{6}} & \frac{1}{\sqrt{3}} \\ -\frac{1}{\sqrt{2}} & \frac{1}{\sqrt{6}} & \frac{1}{\sqrt{3}} \\ \end{array} \!\right]^\top \mathbf{x} = \underset{\mathcal{B}\leftarrow\mathcal{E}}{P} \mathbf{x}, \qquad \mathbf{x} = \left[\!\begin{array}{ccc} \frac{1}{\sqrt{2}} & \frac{1}{\sqrt{6}} & \frac{1}{\sqrt{3}} \\ 0 & -\frac{2}{\sqrt{6}} & \frac{1}{\sqrt{3}} \\ -\frac{1}{\sqrt{2}} & \frac{1}{\sqrt{6}} & \frac{1}{\sqrt{3}} \\ \end{array} \!\right] \mathbf{y} = \underset{\mathcal{E}\leftarrow\mathcal{B}}{P} \mathbf{y}. \] The vector $\mathbf{y}$ is the coordinate vector of $\mathbf{x}$ relative to the basis $\mathcal{B}$, that is $\mathbf{y} = \bigl[\mathbf{x}\bigr]_{\mathcal{B}}.$ With the change of coordinates \[ \mathbf{y} = \underset{\mathcal{B}\leftarrow\mathcal{E}}{P} \mathbf{x}, \qquad \mathbf{x} = \underset{\mathcal{E}\leftarrow\mathcal{B}}{P} \mathbf{y}, \] the quadratic form $Q$ simplifies as follows \[ 2 x_1^2+2 x_1 x_2 +2 x_1 x_3 +2 x_2^2 + 2 x_2 x_3 + 2 x_3^2 = y_1^2 + y_2^2 + 4 y_3^2. \] The quadratic form $y_1^2 + y_2^2 + 4 y_3^2$ is positive definite since $y_1^2 + y_2^2 + 4 y_3^2 \geq 0$ for all $y_1, y_2, y_3 \in \mathbb{R}$ and $y_1^2 + y_2^2 + 4 y_3^2 = 0$ implies $(y_1,y_2,y_3) = (0,0,0).$ Therefore the given quadratic form $Q(\mathbf{x})$ is also positive definite.

-

The above introduced change of coordinates yields the following set equality

\[

\bigl\{ \mathbf{x} \in \mathbb{R}^3 \, : \, Q(\mathbf{x}) = c \bigr\} = \left\{ \mathbf{x} \in \mathbb{R}^3 \, : \bigl[ \mathbf{x}\bigr]_{\mathcal{B}} = \left[\!

\begin{array}{c} y_1 \\ y_2 \\ y_3\end{array}

\!\right] \quad \text{and} \quad \, y_1^2 + y_2^2 + 4 y_3^2 = c \right\}

\]

which holds for each $c \in \mathbb{R}.$ Since

\[

\bigl\{ \mathbf{y} \in \mathbb{R}^3 \, : \, y_1^2 + y_2^2 + 4 y_3^2 = -1 \bigr\}

\]

is the empty set, the stated set equality with $c = -1$ yields that

\[

\bigl\{ \mathbf{x} \in \mathbb{R}^3 \, : \, Q(\mathbf{x}) = -1 \bigr\}

\]

is the empty set.

Since the set

\[

\bigl\{ \mathbf{y} \in \mathbb{R}^3 \, : \, y_1^2 + y_2^2 + 4 y_3^2 = 0 \bigr\}

\]

is a singleton set consisting of the zero vector, the stated set equality with $c = 0$ yields that

\[

\bigl\{ \mathbf{x} \in \mathbb{R}^3 \, : \, Q(\mathbf{x}) = 0 \bigr\}

\]

is a singleton set consisting of the zero vector.

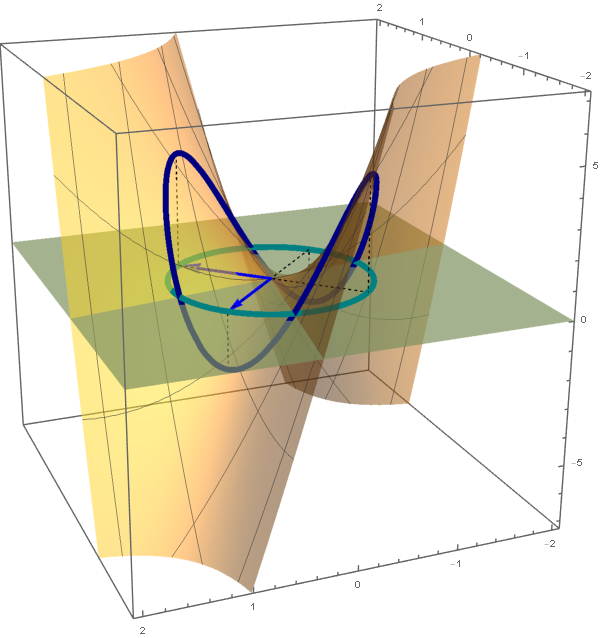

The set

\[

\bigl\{ \mathbf{y} \in \mathbb{R}^3 \, : \, y_1^2 + y_2^2 + 4 y_3^2 = 1 \bigr\}

\]

is a rotated ellipsoid. This ellipsoid is obtained as the ellipse

\[

\bigl\{ \mathbf{y} \in \mathbb{R}^3 \, : \, y_1^2 + 4 y_3^2 = 1, \ y_2 = 0 \bigr\}

= \left\{ \left[\!

\begin{array}{c} \cos\theta \\ 0 \\ \frac{1}{2} \sin\theta \end{array}

\!\right] \ : \ \theta \in [0, 2 \pi) \right\},

\]

which is in the $y_1y_3$-plane, rotates about the $y_3$-axis. The set equality stated at the beginning of this item with $c = 1$ yields that the set

\[

\bigl\{ \mathbf{x} \in \mathbb{R}^3 \ : \ Q(\mathbf{x}) = 1 \bigr\}

\]

is also a rotated ellipsoid obtained as the ellipse

\[

\left\{

(\cos\theta)\left[\!

\begin{array}{c}

\frac{1}{\sqrt{2}} \\ 0 \\ -\frac{1}{\sqrt{2}}

\end{array}

\!\right] + \frac{1}{2} (\sin\theta )

\left[\!

\begin{array}{c}

\frac{1}{\sqrt{3}} \\ \frac{1}{\sqrt{3}} \\ \frac{1}{\sqrt{3}}

\end{array}

\!\right]

\ : \ \theta \in [0, 2 \pi) \right\},

\]

which is in the plane spanned by the vectors

\[

\left[\!

\begin{array}{c}

\frac{1}{\sqrt{2}} \\ 0 \\ -\frac{1}{\sqrt{2}}

\end{array}

\!\right], \quad \left[\!

\begin{array}{c}

\frac{1}{\sqrt{3}} \\ \frac{1}{\sqrt{3}} \\ \frac{1}{\sqrt{3}}

\end{array}

\!\right],

\]

rotates about the line determined by the vector $\displaystyle \left[\!

\begin{array}{c}

\frac{1}{\sqrt{3}} \\ \frac{1}{\sqrt{3}} \\ \frac{1}{\sqrt{3}}

\end{array}

\!\right].$ Notice that the intersection of this ellipsoid and the plane spanned by the vectors

\[

\left[\!

\begin{array}{c}

\frac{1}{\sqrt{2}} \\ 0 \\ -\frac{1}{\sqrt{2}}

\end{array}

\!\right] \quad \text{and} \quad \left[\!

\begin{array}{c}

\frac{1}{\sqrt{6}} \\ -\frac{2}{\sqrt{6}} \\ \frac{1}{\sqrt{6}}

\end{array}

\!\right]

\]

is the unit circle

\[

\left\{ (\cos\theta) \left[\!

\begin{array}{c}

\frac{1}{\sqrt{2}} \\ 0 \\ -\frac{1}{\sqrt{2}}

\end{array}

\!\right] + (\sin\theta) \left[\!

\begin{array}{c}

\frac{1}{\sqrt{6}} \\ -\frac{2}{\sqrt{6}} \\ \frac{1}{\sqrt{6}}

\end{array}

\!\right] \ : \ \theta \in [0, 2 \pi) \right\}.

\]

- Since the change of coordinate matrices $\displaystyle \underset{\mathcal{E}\leftarrow\mathcal{B}}{P}$ and $\displaystyle \underset{\mathcal{B}\leftarrow\mathcal{E}}{P}$ are orthogonal matrices, we have \[ \| \mathbf{y} \|^2 = \mathbf{y}^\top \mathbf{y} = \left(\underset{\mathcal{B}\leftarrow\mathcal{E}}{P} \mathbf{x}\right)^\top \left(\underset{\mathcal{B}\leftarrow\mathcal{E}}{P} \mathbf{x}\right) = \mathbf{x}^\top \left(\underset{\mathcal{B}\leftarrow\mathcal{E}}{P}\right)^\top \underset{\mathcal{B}\leftarrow\mathcal{E}}{P} \mathbf{x} = \mathbf{x}^\top \mathbf{x} = \|\mathbf{x} \|^2. \] Therefore \[ S = \bigl\{ Q(\mathbf{x}) \, : \, \mathbf{x} \in \mathbb{R}^3, \ \| \mathbf{x} \| = 1 \bigr\} = \bigl\{y_1^2 + y_2^2 + 4 y_3^2 \, : \, \| \mathbf{y} \| = 1, \ \mathbf{y} \in\mathbb{R}^3 \bigr\}. \] Since whenever $y_1^2 + y_2^2 + y_3^2 = 1$ we have \[ 1 = y_1^2 + y_2^2 + y_3^2 \leq y_1^2 + y_2^2 + 4 y_3^2 \leq 4 y_1^2 + 4 y_2^2 + 4 y_3^2 = 4, \] we deduce that $\min S = 1$ and $\max S = 4$. The form $y_1^2 + y_2^2 + 4 y_3^2$ takes the value $1$ when $(y_1,y_2,y_3) = (\cos \theta, \sin \theta, 0)$ and the value $4$ when $(y_1,y_2,y_3) = (0,0,1)$ or $(y_1,y_2,y_3) = (0,0,-1)$. Using the change of coordinates matrix $\displaystyle \underset{\mathcal{E}\leftarrow\mathcal{B}}{P}$ we conclude that the minimum value, $1$, of $S$ is taken at the circle on the unit sphere in $\mathbb{R}^3$ given by \[ \left\{ (\cos\theta) \left[\! \begin{array}{c} \frac{1}{\sqrt{2}} \\ 0 \\ -\frac{1}{\sqrt{2}} \end{array} \!\right] + (\sin\theta) \left[\! \begin{array}{c} \frac{1}{\sqrt{6}} \\ -\frac{2}{\sqrt{6}} \\ \frac{1}{\sqrt{6}} \end{array} \!\right] \ : \ \theta \in [0, 2 \pi) \right\}. \] The situation with the maximum value $4$ of $S$ is simpler; this value is taken at two vectors: \[ \bigl\{ \mathbf{x} \in \mathbb{R}^3 \, : \, Q(\mathbf{x}) = 5 \bigr\} = \left\{\left[\! \begin{array}{c} \frac{1}{\sqrt{3}} \\ \frac{1}{\sqrt{3}} \\ \frac{1}{\sqrt{3}} \end{array} \!\right], - \left[\! \begin{array}{c} \frac{1}{\sqrt{3}} \\ \frac{1}{\sqrt{3}} \\ \frac{1}{\sqrt{3}} \end{array} \!\right] \right\}. \]

- Suggested problems for Section 7.3: 1, 3, 5, 9, 11, 12

-

In Section 7.2 in the book the author does not discus quadratic forms with three variables. Here are some animations that might help you understand the quadratic form $x_1^2 + x_2^2 - x_3^2$. Here I show the surfaces in ${\mathbb R}^3$ with equations $x_1^2 + x_2^2 - x_3^2 = c$ for different values of $c$. These surfaces are called hyperboloids. You can read more at the Wikipedia

Hyperboloid page. One sheet hyperboloids are often encountered in art, see these Wikipedia pages Hyperboloid structure and list of hyperboloid structures, do not miss the Gallery at the bottom of the last Wikipedia page.

Place the cursor over the image to start the animation.

Five of the above level surfaces at different level of opacity.

- I continue with examples posted on Thursday.

-

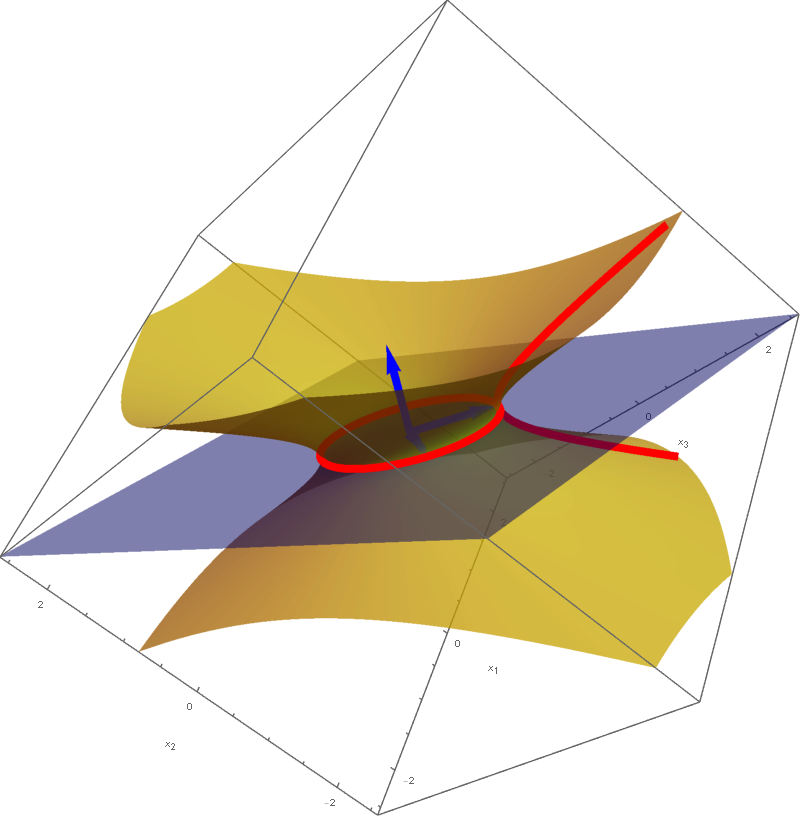

Example 4.

In this item we consider the quadratic form

\[

Q(x_1, x_2, x_3) = 4 x_1 x_2 +2 x_1 x_3 + 3 x_2^2+4 x_2 x_3 \quad \text{where} \quad x_1, x_2 \in \mathbb{R}.

\]

- We have \begin{align*} Q(x_1, x_2, x_3) = \bigl[ x_1 \ \ x_2 \ \ x_3 \bigr] \left[\! \begin{array}{ccc} 0 & 2 & 1 \\ 2 & 3 & 2 \\ 1 & 2 & 0 \\ \end{array} \!\right] \left[\! \begin{array}{c} x_1 \\ x_2 \\ x_3 \end{array} \!\right] & = 4 x_1 x_2 +2 x_1 x_3 + 3 x_2^2+4 x_2 x_3 \\ & \qquad \text{where} \quad x_1, x_2, x_3 \in \mathbb{R}. \end{align*}

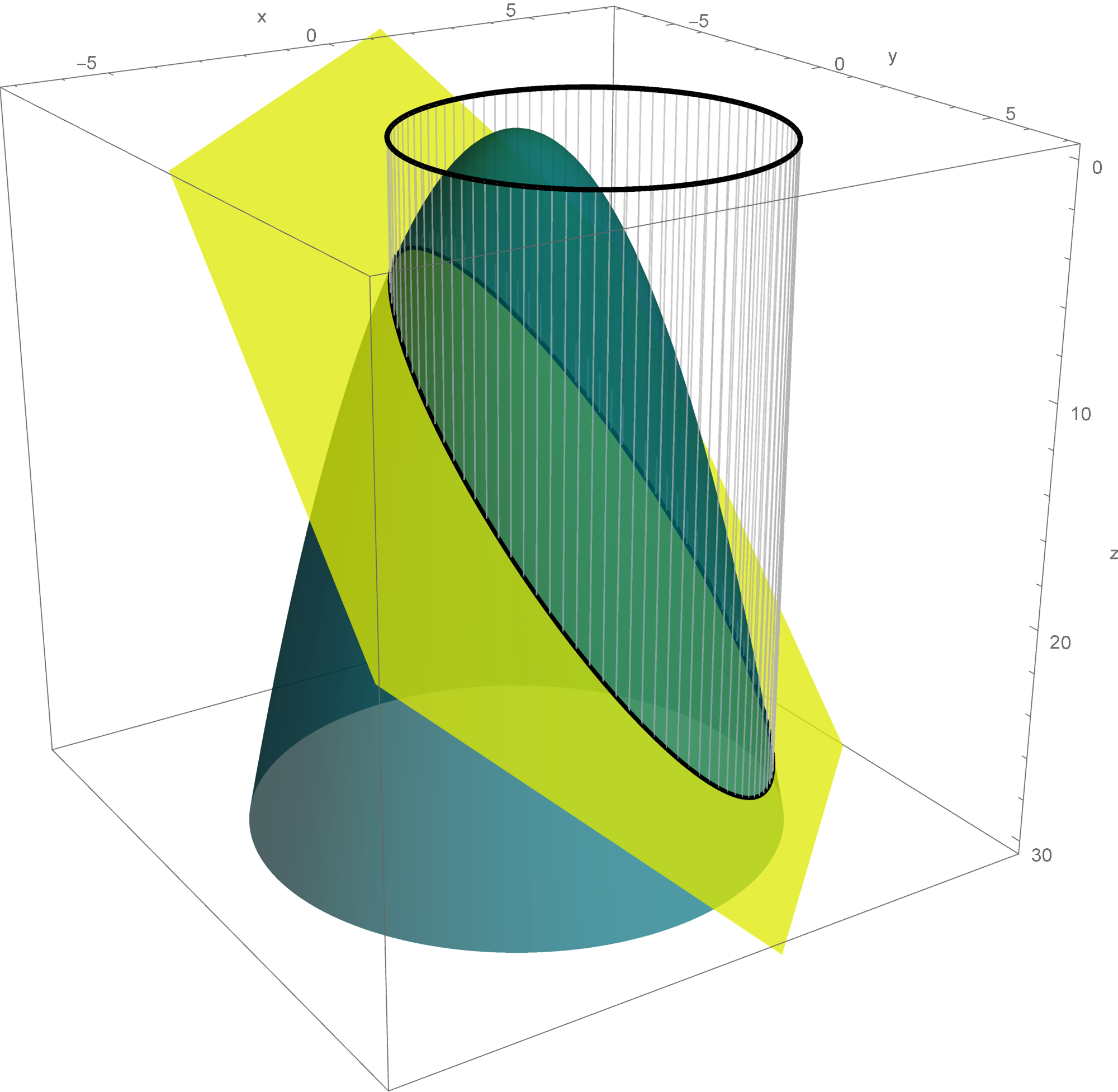

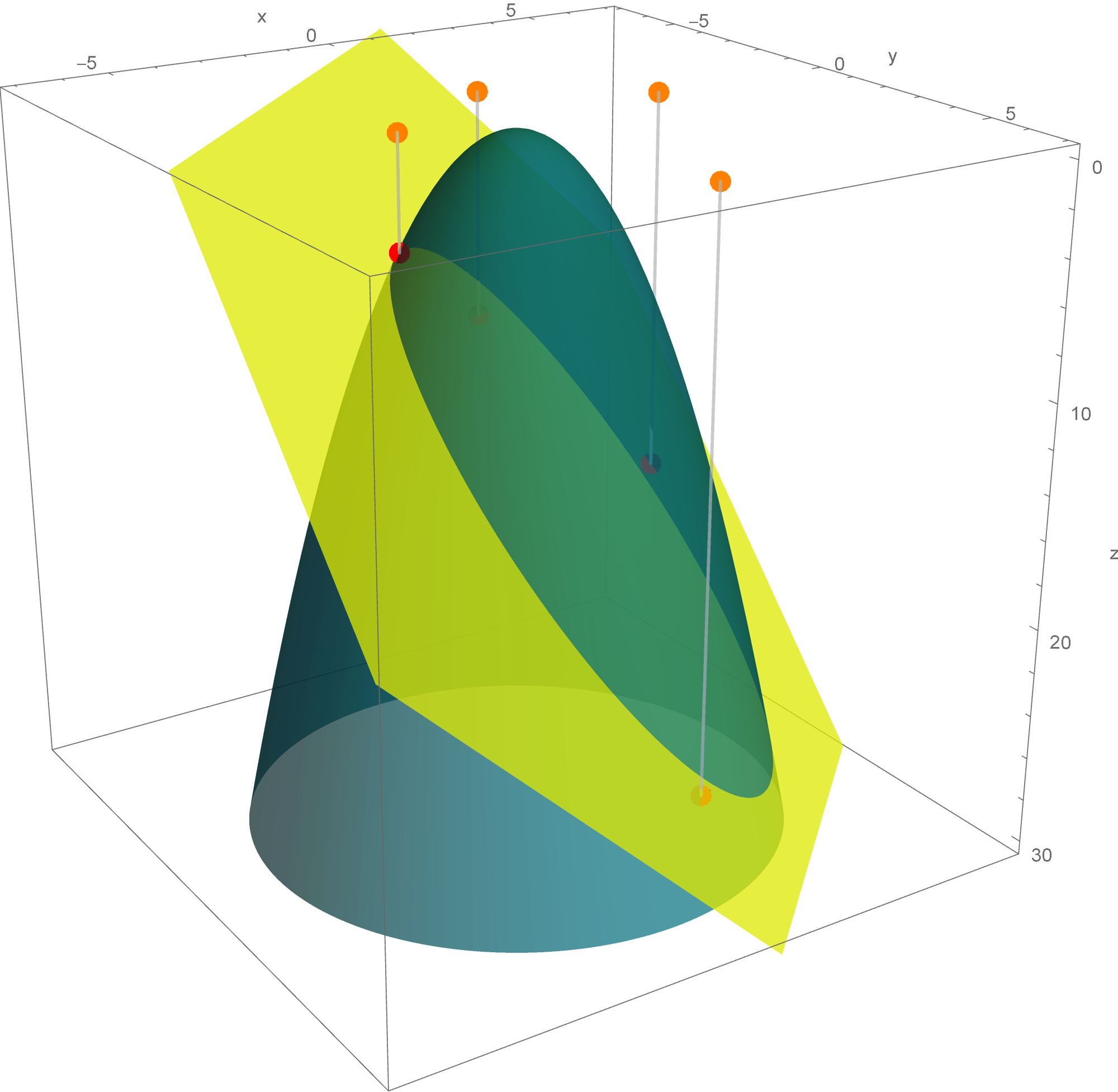

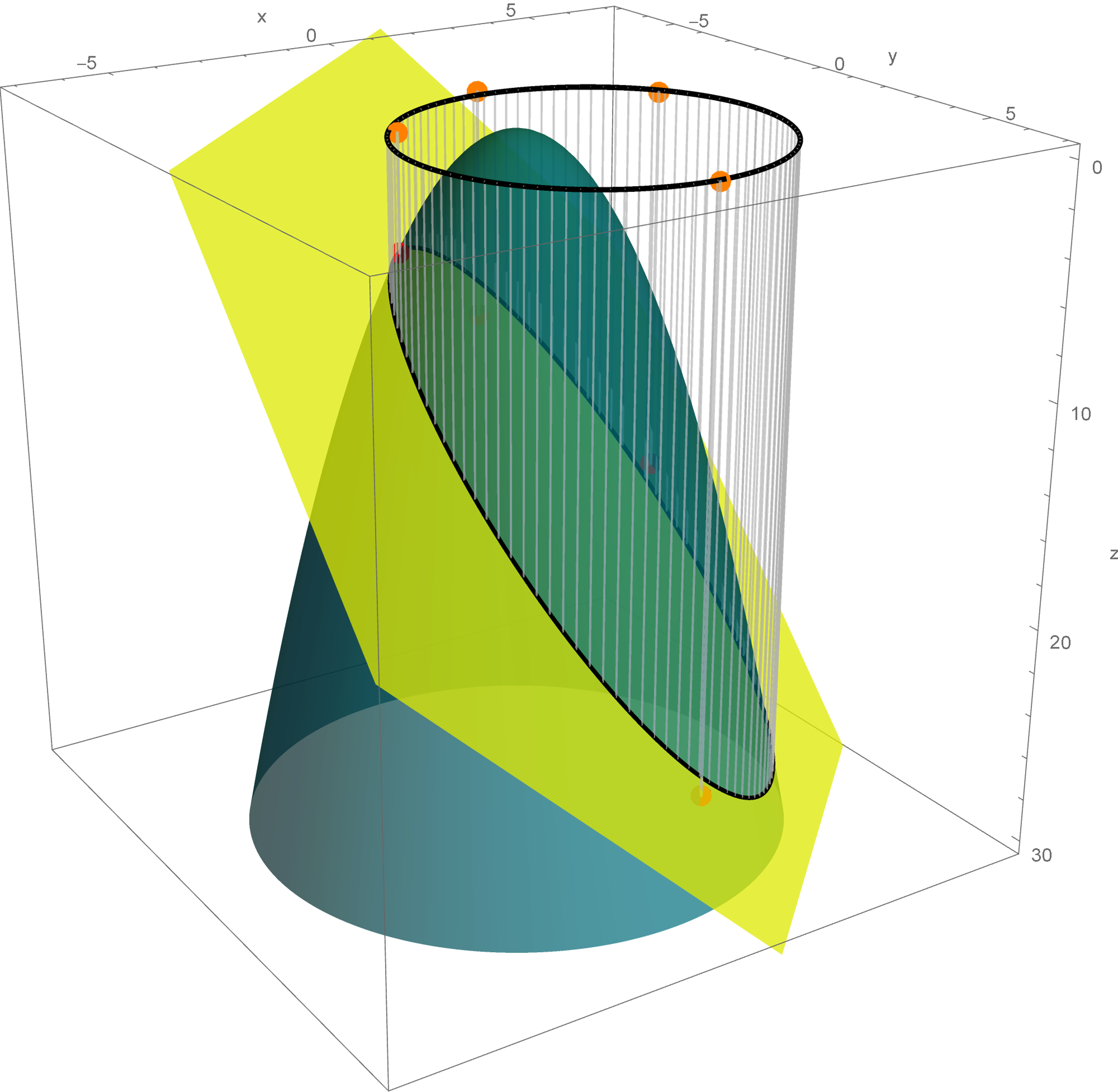

- Clearly the quadratic form $Q$ is not a zero form. To classify $Q$ as positive semidefinite, negative semidefinite, indefinite we orthogonally diagonalize the matrix of this quadratic form: \[ \left[\! \begin{array}{ccc} 0 & 2 & 1 \\ 2 & 3 & 2 \\ 1 & 2 & 0 \\ \end{array} \!\right] = \left[\!\begin{array}{ccc} -\frac{1}{\sqrt{2}} & \frac{1}{\sqrt{3}} & \frac{1}{\sqrt{6}} \\ 0 & -\frac{1}{\sqrt{3}} & \frac{2}{\sqrt{6}} \\ \frac{1}{\sqrt{2}} & \frac{1}{\sqrt{3}} & \frac{1}{\sqrt{6}} \\ \end{array} \!\right] \left[\! \begin{array}{ccc} -1 & 0 & 0 \\ 0 & -1 & 0 \\ 0 & 0 & 5 \end{array} \!\right] \left[\!\begin{array}{ccc} -\frac{1}{\sqrt{2}} & \frac{1}{\sqrt{3}} & \frac{1}{\sqrt{6}} \\ 0 & -\frac{1}{\sqrt{3}} & \frac{2}{\sqrt{6}} \\ \frac{1}{\sqrt{2}} & \frac{1}{\sqrt{3}} & \frac{1}{\sqrt{6}} \\ \end{array} \!\right]^\top \] Let us introduce two bases \[ \mathcal{B} = \left\{ \left[\! \begin{array}{c} -\frac{1}{\sqrt{2}} \\ 0 \\ \frac{1}{\sqrt{2}} \end{array} \!\right], \left[\! \begin{array}{c} \frac{1}{\sqrt{3}} \\ -\frac{1}{\sqrt{3}} \\ \frac{1}{\sqrt{3}} \end{array} \!\right], \left[\! \begin{array}{c} \frac{1}{\sqrt{6}} \\ \frac{2}{\sqrt{6}} \\ \frac{1}{\sqrt{6}} \end{array} \!\right] \right\} \qquad \text{and} \qquad \mathcal{E} = \left\{ \left[\! \begin{array}{c} 1 \\ 0 \\ 0 \end{array} \!\right], \left[\! \begin{array}{c} 0 \\ 1 \\ 0 \end{array} \!\right], \left[\! \begin{array}{c} 0 \\ 0 \\ 1 \end{array} \!\right] \right\}. \] The above orthogonal diagonalization suggests a very useful change of coordinates: \[ \mathbf{y} = \left[\!\begin{array}{ccc} -\frac{1}{\sqrt{2}} & \frac{1}{\sqrt{3}} & \frac{1}{\sqrt{6}} \\ 0 & -\frac{1}{\sqrt{3}} & \frac{2}{\sqrt{6}} \\ \frac{1}{\sqrt{2}} & \frac{1}{\sqrt{3}} & \frac{1}{\sqrt{6}} \\ \end{array} \!\right]^\top \mathbf{x} = \underset{\mathcal{B}\leftarrow\mathcal{E}}{P} \mathbf{x}, \qquad \mathbf{x} = \left[\!\begin{array}{ccc} -\frac{1}{\sqrt{2}} & \frac{1}{\sqrt{3}} & \frac{1}{\sqrt{6}} \\ 0 & -\frac{1}{\sqrt{3}} & \frac{2}{\sqrt{6}} \\ \frac{1}{\sqrt{2}} & \frac{1}{\sqrt{3}} & \frac{1}{\sqrt{6}} \\ \end{array} \!\right] \mathbf{y} = \underset{\mathcal{E}\leftarrow\mathcal{B}}{P} \mathbf{y}. \] The coordinates $\mathbf{y}$ are the coordinates of $\mathbf{x}$ relative to the basis $\mathcal{B}$, that is $\mathbf{y} = \bigl[\mathbf{x}\bigr]_{\mathcal{B}}.$ With the change of coordinates \[ \mathbf{y} = \underset{\mathcal{B}\leftarrow\mathcal{E}}{P} \mathbf{x}, \qquad \mathbf{x} = \underset{\mathcal{E}\leftarrow\mathcal{B}}{P} \mathbf{y}, \] the quadratic form $Q$ simplifies as follows \[ 4 x_1 x_2 +2 x_1 x_3 + 3 x_2^2+4 x_2 x_3 = - y_1^2 - y_2^2 + 5 y_3^2. \] Clearly the quadratic form $- y_1^2 - y_2^2 + 5 y_3^2$ is an indefinite form taking the value $-1$ at $(y_1,y_2,y_3) = (1,0,0)$ and the value $5$ at $(y_1,y_2,y_3) = (0,0,1).$

-

The above introduced change of coordinates yields the following set equality

\[

\bigl\{ \mathbf{x} \in \mathbb{R}^3 \, : \, Q(\mathbf{x}) = c \bigr\} = \left\{ \mathbf{x} \in \mathbb{R}^3 \, : \bigl[ \mathbf{x}\bigr]_{\mathcal{B}} = \left[\!

\begin{array}{c} y_1 \\ y_2 \\ y_3\end{array}

\!\right] \quad \text{and} \quad \, - y_1^2 - y_2^2 + 5 y_3^2 = c \right\}

\]

which holds for each $c \in \mathbb{R}.$

Since the set

\[

\bigl\{ \mathbf{y} \in \mathbb{R}^3 \, : \, - y_1^2 - y_2^2 + 5 y_3^2 = 0 \bigr\}

\]

is a rotated cone, the set equality stated at the beginning of this item with $c=0$ implies that the set

\[

\bigl\{ \mathbf{x} \in \mathbb{R}^3 \, : \, Q(\mathbf{x}) = 0 \bigr\}

\]

is also a rotated cone. This cone obtained by the rotation of the line spanned by the vector

\[

\sqrt{5} \left[\!

\begin{array}{c}

-\frac{1}{\sqrt{2}} \\ 0 \\ \frac{1}{\sqrt{2}}

\end{array}

\!\right] +

\left[\!

\begin{array}{c}

\frac{1}{\sqrt{6}} \\ \frac{2}{\sqrt{6}} \\ \frac{1}{\sqrt{6}}

\end{array}

\!\right]

\]

about the line spanned by the vector

\[

\left[\!

\begin{array}{c}

\frac{1}{\sqrt{6}} \\ \frac{2}{\sqrt{6}} \\ \frac{1}{\sqrt{6}}

\end{array}

\!\right].

\]

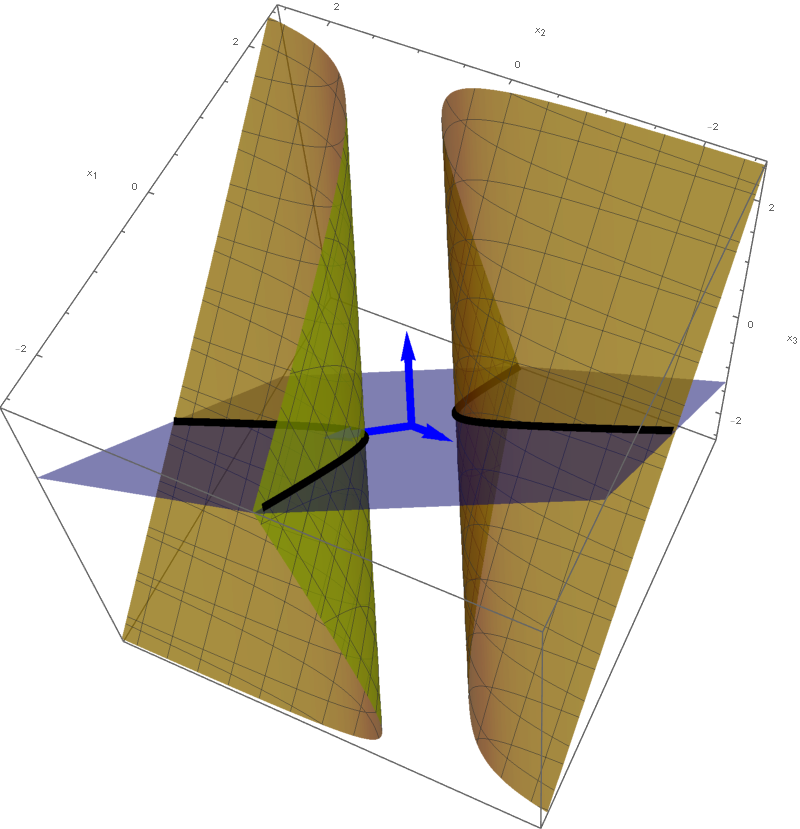

The set

\[

\bigl\{ \mathbf{y} \in \mathbb{R}^3 \, : \, - y_1^2 - y_2^2 + 5 y_3^2 = 1 \bigr\}

\]

is a rotated two sheet hyperboloid. This two sheet hyperboloid is obtained as the hyperbola $-y_1^2 + 5 y_3^2 = 1, y_2 = 0$ rotates about the $y_3$-axis. Consequently, the set equality stated at the beginning of this item with $c=1$ implies that the set

\[

\bigl\{ \mathbf{x} \in \mathbb{R}^3 \, : \, Q(\mathbf{x}) = 1 \bigr\}

\]

is also a rotated two sheet hyperboloid. This hyperboloid is obtained as a hyperbola in the plane spanned by the vectors

\[

\left[\!

\begin{array}{c}

-\frac{1}{\sqrt{2}} \\ 0 \\ \frac{1}{\sqrt{2}}

\end{array}

\!\right], \quad

\left[\!

\begin{array}{c}

\frac{1}{\sqrt{6}} \\ \frac{2}{\sqrt{6}} \\ \frac{1}{\sqrt{6}}

\end{array}

\!\right]

\]

rotates about the vector

\[

\left[\!

\begin{array}{c}

\frac{1}{\sqrt{6}} \\ \frac{2}{\sqrt{6}} \\ \frac{1}{\sqrt{6}}

\end{array}

\!\right].

\]

The set

\[

\bigl\{ \mathbf{y} \in \mathbb{R}^3 \, : \, - y_1^2 - y_2^2 + 5 y_3^2 = 1 \bigr\}

\]

is a rotated two sheet hyperboloid. This two sheet hyperboloid is obtained as the hyperbola $-y_1^2 + 5 y_3^2 = 1, y_2 = 0$ rotates about the $y_3$-axis. Consequently, the set equality stated at the beginning of this item with $c=1$ implies that the set

\[

\bigl\{ \mathbf{x} \in \mathbb{R}^3 \, : \, Q(\mathbf{x}) = 1 \bigr\}

\]

is also a rotated two sheet hyperboloid. This hyperboloid is obtained as a hyperbola in the plane spanned by the vectors

\[

\left[\!

\begin{array}{c}

-\frac{1}{\sqrt{2}} \\ 0 \\ \frac{1}{\sqrt{2}}

\end{array}

\!\right], \quad

\left[\!

\begin{array}{c}

\frac{1}{\sqrt{6}} \\ \frac{2}{\sqrt{6}} \\ \frac{1}{\sqrt{6}}

\end{array}

\!\right]

\]

rotates about the vector

\[

\left[\!

\begin{array}{c}

\frac{1}{\sqrt{6}} \\ \frac{2}{\sqrt{6}} \\ \frac{1}{\sqrt{6}}

\end{array}

\!\right].

\]

The set

\[

\bigl\{ \mathbf{y} \in \mathbb{R}^3 \, : \, - y_1^2 - y_2^2 + 5 y_3^2 = -1 \bigr\}

\]

is a rotated one sheet hyperboloid. This hyperboloid is obtained as the hyperbola $-y_2^2 + 5 y_3^2 = -1, y_1 = 0$ rotates about $y_3$-axis. The set equality stated at the beginning of this item with $c=-1$ implies that the set

\[

\bigl\{ \mathbf{x} \in \mathbb{R}^3 \, : \, Q(\mathbf{x}) = -1 \bigr\}

\]

is also a rotated one sheet hyperboloid. This hyperboloid is obtained as a hyperbola in the plane spanned by the vectors

\[

\left[\!

\begin{array}{c}

\frac{1}{\sqrt{3}} \\ -\frac{1}{\sqrt{3}} \\ \frac{1}{\sqrt{3}}

\end{array}

\!\right], \quad

\left[\!

\begin{array}{c}

\frac{1}{\sqrt{6}} \\ \frac{2}{\sqrt{6}} \\ \frac{1}{\sqrt{6}}

\end{array}

\!\right]

\]

rotates about the vector

\[

\left[\!

\begin{array}{c}

\frac{1}{\sqrt{6}} \\ \frac{2}{\sqrt{6}} \\ \frac{1}{\sqrt{6}}

\end{array}

\!\right].

\]

The set

\[

\bigl\{ \mathbf{y} \in \mathbb{R}^3 \, : \, - y_1^2 - y_2^2 + 5 y_3^2 = -1 \bigr\}

\]

is a rotated one sheet hyperboloid. This hyperboloid is obtained as the hyperbola $-y_2^2 + 5 y_3^2 = -1, y_1 = 0$ rotates about $y_3$-axis. The set equality stated at the beginning of this item with $c=-1$ implies that the set

\[

\bigl\{ \mathbf{x} \in \mathbb{R}^3 \, : \, Q(\mathbf{x}) = -1 \bigr\}

\]

is also a rotated one sheet hyperboloid. This hyperboloid is obtained as a hyperbola in the plane spanned by the vectors

\[

\left[\!

\begin{array}{c}

\frac{1}{\sqrt{3}} \\ -\frac{1}{\sqrt{3}} \\ \frac{1}{\sqrt{3}}

\end{array}

\!\right], \quad

\left[\!

\begin{array}{c}

\frac{1}{\sqrt{6}} \\ \frac{2}{\sqrt{6}} \\ \frac{1}{\sqrt{6}}

\end{array}

\!\right]

\]

rotates about the vector

\[

\left[\!

\begin{array}{c}

\frac{1}{\sqrt{6}} \\ \frac{2}{\sqrt{6}} \\ \frac{1}{\sqrt{6}}

\end{array}

\!\right].

\]

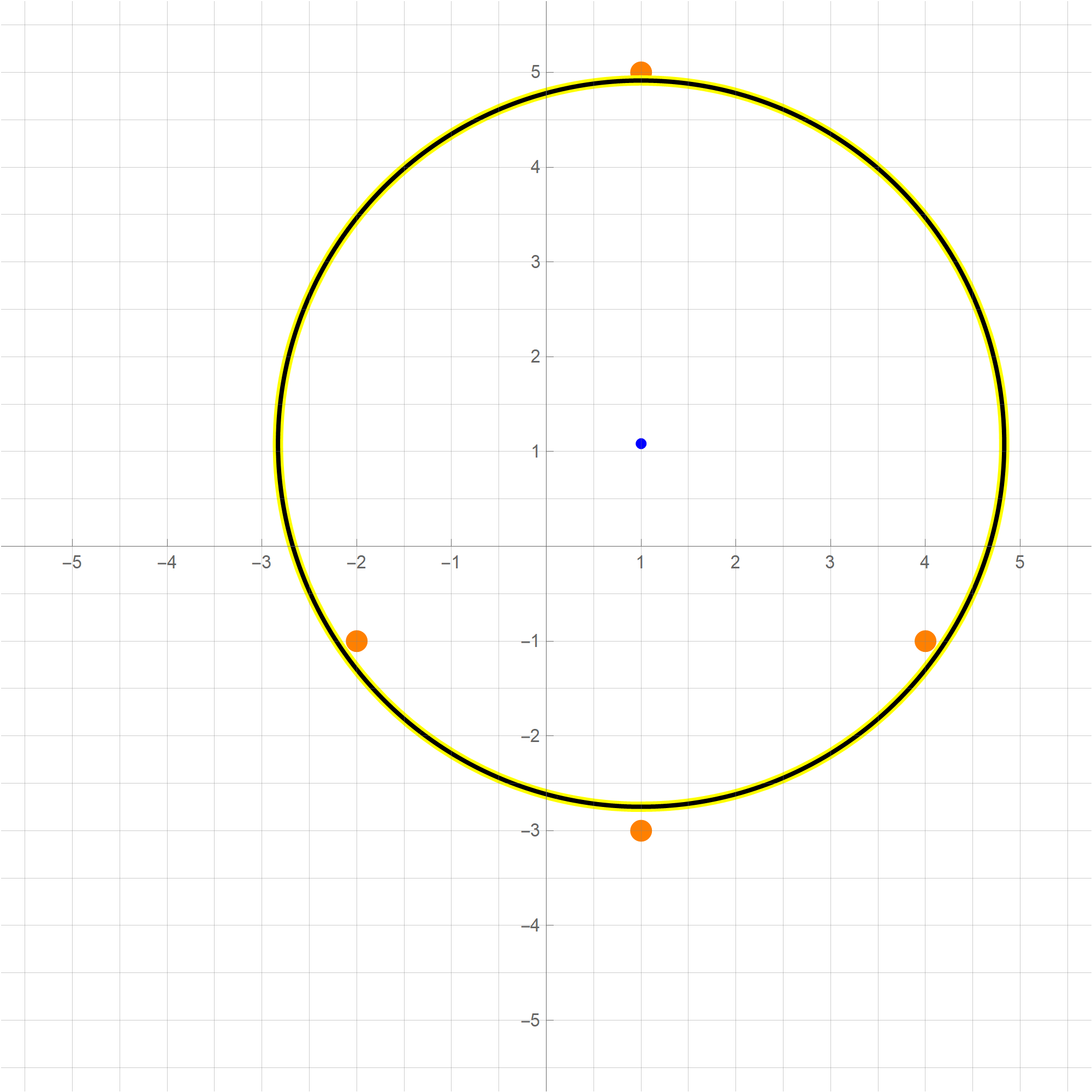

- Since the change of coordinate matrices $\displaystyle \underset{\mathcal{E}\leftarrow\mathcal{B}}{P}$ and $\displaystyle \underset{\mathcal{B}\leftarrow\mathcal{E}}{P}$ are orthogonal we have \[ \| \mathbf{y} \|^2 = \mathbf{y}^\top \mathbf{y} = \left(\underset{\mathcal{B}\leftarrow\mathcal{E}}{P} \mathbf{x}\right)^\top \left(\underset{\mathcal{B}\leftarrow\mathcal{E}}{P} \mathbf{x}\right) = \mathbf{x}^\top \left(\underset{\mathcal{B}\leftarrow\mathcal{E}}{P}\right)^\top \underset{\mathcal{B}\leftarrow\mathcal{E}}{P} \mathbf{x} = \mathbf{x}^\top \mathbf{x} = \|\mathbf{x} \|^2. \] Therefore \[ S = \bigl\{ Q(\mathbf{x}) \, : \, \mathbf{x} \in \mathbb{R}^3, \ \| \mathbf{x} \| = 1 \bigr\} = \bigl\{ - y_1^2 - y_2^2 + 5 y_3^2 \, : \, \mathbf{y} = 1, \ \mathbf{y} \in\mathbb{R}^3 \bigr\}. \] Since whenever $y_1^2 + y_2^2 + y_3^2 = 1$ we have \[ -1 = -1 y_1^2 - 1 y_2^2 - 1 y_3^2 \leq - y_1^2 - y_2^2 + 5 y_3^2 \leq 5 y_1^2 + 5 y_2^2 + 5 y_3^2 = 5 \] wededuce that $\min S = -1$ and $\max S = 5$. The form $- y_1^2 - y_2^2 + 5 y_3^2$ takes the value $-1$ when $(y_1,y_2,y_3) = (\cos \theta, \sin \theta, 0)$ and the value $5$ when $(y_1,y_2,y_3) = (0,0,1)$ or $(y_1,y_2,y_3) = (0,0,-1)$. Using the change of coordinates matrix $\displaystyle \underset{\mathcal{E}\leftarrow\mathcal{B}}{P}$ we conclude that: The minimum value $-1$ is taken at the circle on the unit sphere in $\mathbb{R}^3$. That is \[ \bigl\{ \mathbf{x} \in \mathbb{R}^3 \, : \, Q(\mathbf{x}) = -1 \bigr\} = \left\{ (\cos \theta) \left[\! \begin{array}{c} -\frac{1}{\sqrt{2}} \\ 0 \\ \frac{1}{\sqrt{2}} \end{array} \!\right] + (\sin \theta) \left[\! \begin{array}{c} \frac{1}{\sqrt{3}} \\ -\frac{1}{\sqrt{3}} \\ \frac{1}{\sqrt{3}} \end{array} \!\right] \ : \ \theta \in [0, 2\pi) \right\}. \] The situation with the maximum value $5$ is simpler, this value is taken at two vectors: \[ \bigl\{ \mathbf{x} \in \mathbb{R}^3 \, : \, Q(\mathbf{x}) = 5 \bigr\} = \left\{\left[\! \begin{array}{c} \frac{1}{\sqrt{6}} \\ \frac{2}{\sqrt{6}} \\ \frac{1}{\sqrt{6}} \end{array} \!\right], - \left[\! \begin{array}{c} \frac{1}{\sqrt{6}} \\ \frac{2}{\sqrt{6}} \\ \frac{1}{\sqrt{6}} \end{array} \!\right] \right\}. \]

-

In the several items below we will consider several specific quadratic forms $Q$ and answer the following questions four questions:

- Write the quadratic form $Q$ using a symmetric matrix $A$ as $Q(\mathbf{x}) = \mathbf{x}^\top\!A \mathbf{x}$ where $\mathbf{x}\in \mathbb{R}^n.$

- Classify $Q$ using the quadruplicity stated in the post on Tuesday: positive semidefinite, negative semidefinite, indefinite. Don't forget to state whether the form is positive definite or negative definite or not.

- Give a detailed description of the sets \[ \bigl\{ \mathbf{x} \in \mathbb{R}^n \, : \, Q(\mathbf{x}) = -1 \bigr\}, \quad \bigl\{ \mathbf{x} \in \mathbb{R}^n \, : \, Q(\mathbf{x}) = 0 \bigr\}, \quad \bigl\{ \mathbf{x} \in \mathbb{R}^n \, : \, Q(\mathbf{x}) = 1 \bigr\}. \]

- Consider the set of real numbers \[ S = \bigl\{ Q(\mathbf{x}) \, : \ \mathbf{x}\in \mathbb{R}^n, \ \ \| \mathbf{x} \| = 1 \bigr\}. \] Determine $\min S$ and $\max S$ and describe the sets \[ \bigl\{ \mathbf{x} \in \mathbb{R}^n \, : \ \| \mathbf{x} \| = 1, \ \ Q(\mathbf{x}) = \min S \bigr\}, \qquad \bigl\{ \mathbf{x} \in \mathbb{R}^n \, : \ \| \mathbf{x} \| = 1, \ \ Q(\mathbf{x}) = \max S \bigr\}. \]

-

Example 1.

In this item we consider the quadratic form

\[

Q(x_1,x_2) = 6 x_1^2 - 4 x_2 x_1 + 3 x_2^2 \quad \text{where} \quad x_1, x_2 \in \mathbb{R}.

\]

- We have \[ Q(x_1,x_2) = \bigl[ x_1 \ \ x_2 \bigr] \left[\! \begin{array}{cc} 6 & -2 \\ -2 & 3 \end{array} \!\right] \left[\! \begin{array}{c} x_1 \\ x_2 \end{array} \!\right] = 6 x_1^2 - 4 x_2 x_1 + 3 x_2^2 \quad \text{where} \quad x_1, x_2 \in \mathbb{R}. \]

-

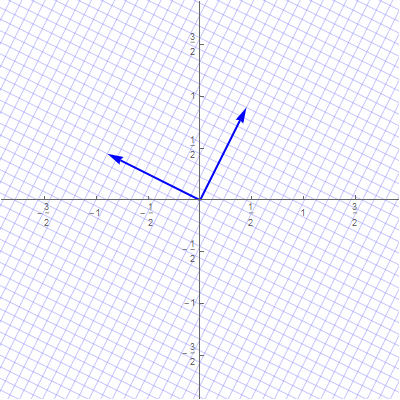

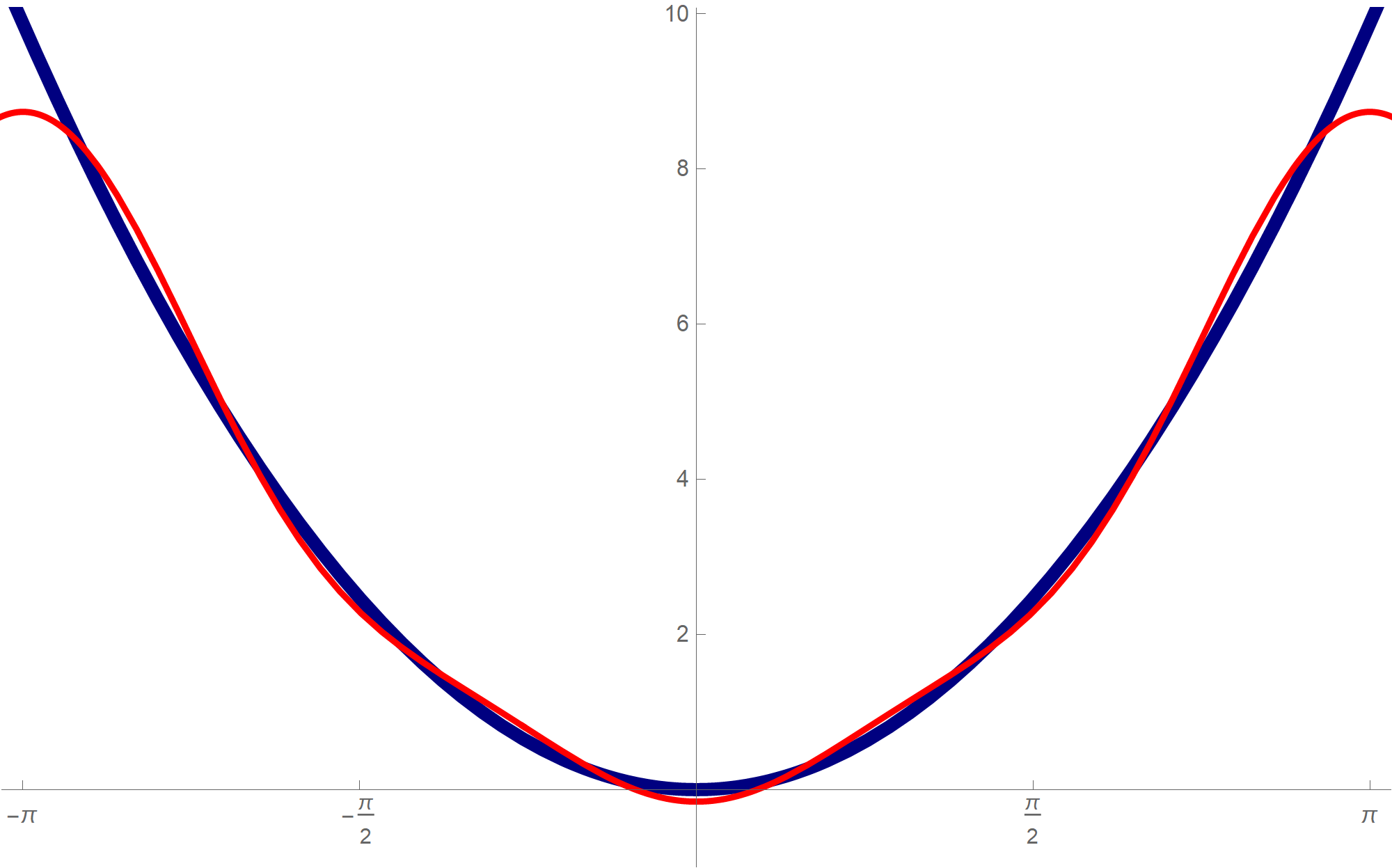

Clearly the quadratic form $Q$ is not a zero form. To classify $Q$ as positive semidefinite, negative semidefinite, indefinite we orthogonally diagonalize the matrix of this quadratic form:

\[

\left[\!

\begin{array}{cc}

6 & -2 \\

-2 & 3

\end{array}

\!\right] = \left[\!

\begin{array}{cc}

\frac{1}{\sqrt{5}} & -\frac{2}{\sqrt{5}} \\

\frac{2}{\sqrt{5}} & \frac{1}{\sqrt{5}}

\end{array}

\!\right] \left[\!

\begin{array}{cc}

2 & 0 \\

0 & 7

\end{array}

\!\right] \left[\!

\begin{array}{cc}

\frac{1}{\sqrt{5}} & -\frac{2}{\sqrt{5}} \\

\frac{2}{\sqrt{5}} & \frac{1}{\sqrt{5}}

\end{array}

\!\right]^\top

\]

Let us introduce two bases

\[

\mathcal{B} = \left\{ \left[\!

\begin{array}{c}

\frac{1}{\sqrt{5}} \\

\frac{2}{\sqrt{5}}

\end{array}

\!\right], \left[\!

\begin{array}{c}

-\frac{2}{\sqrt{5}} \\

\frac{1}{\sqrt{5}}

\end{array}

\!\right] \right\} \qquad \text{and} \qquad

\mathcal{E} = \left\{ \left[\!

\begin{array}{c} 1 \\ 0 \end{array}

\!\right], \left[\!

\begin{array}{c}

0 \\ 1

\end{array}

\!\right] \right\}.

\]

The above orthogonal diagonalization suggests a very useful change of coordinates:

\[

\mathbf{y} = \left[\!

\begin{array}{cc}

\frac{1}{\sqrt{5}} & -\frac{2}{\sqrt{5}} \\

\frac{2}{\sqrt{5}} & \frac{1}{\sqrt{5}}

\end{array}

\!\right]^\top \mathbf{x} = \underset{\mathcal{B}\leftarrow\mathcal{E}}{P} \mathbf{x}, \qquad

\mathbf{x} = \left[\!

\begin{array}{cc}

\frac{1}{\sqrt{5}} & -\frac{2}{\sqrt{5}} \\

\frac{2}{\sqrt{5}} & \frac{1}{\sqrt{5}}

\end{array}

\!\right] \mathbf{y} = \underset{\mathcal{E}\leftarrow\mathcal{B}}{P} \mathbf{y}.

\]

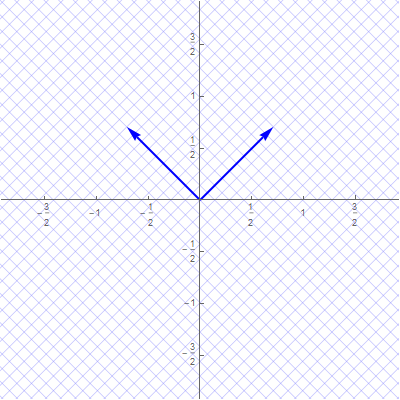

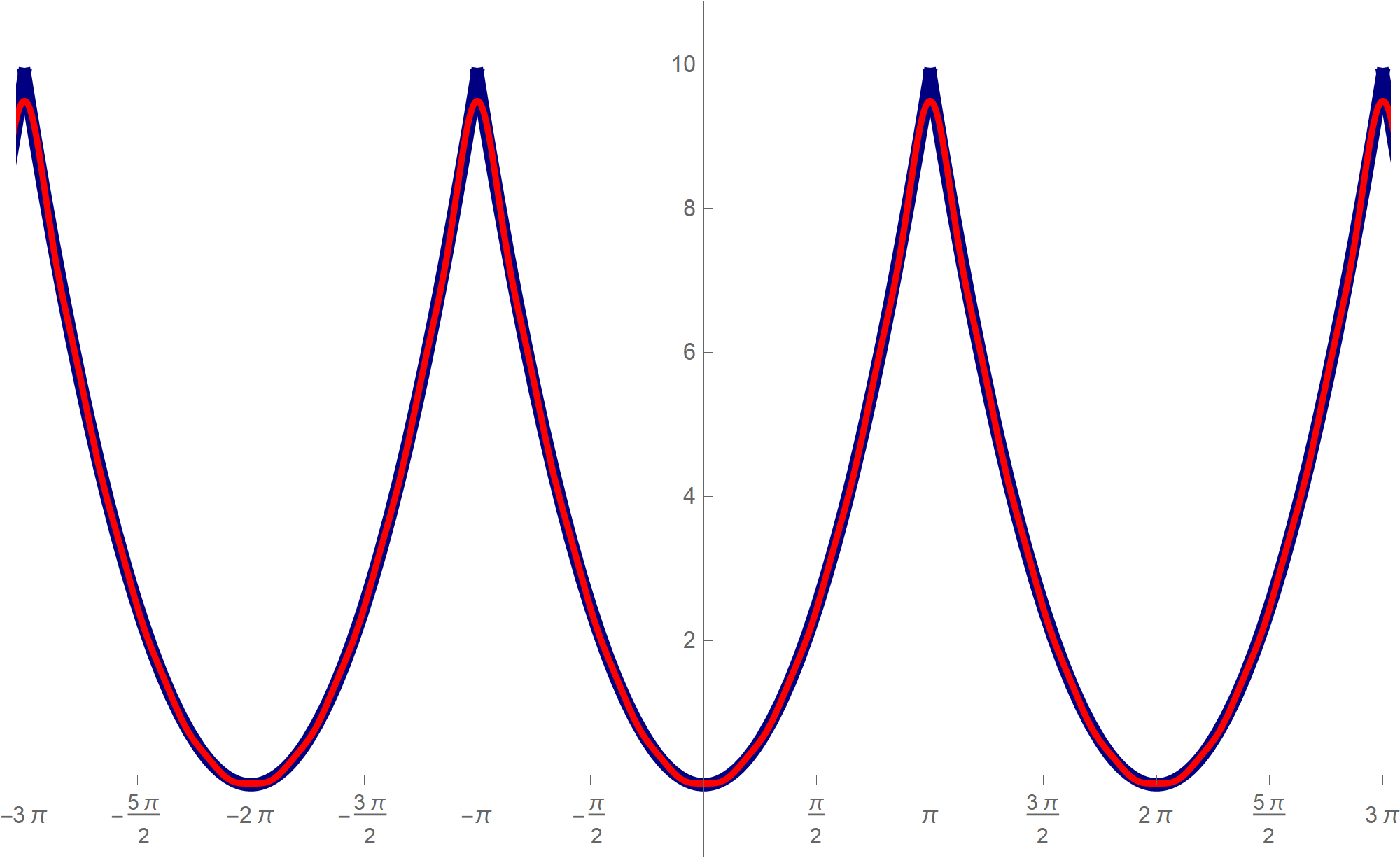

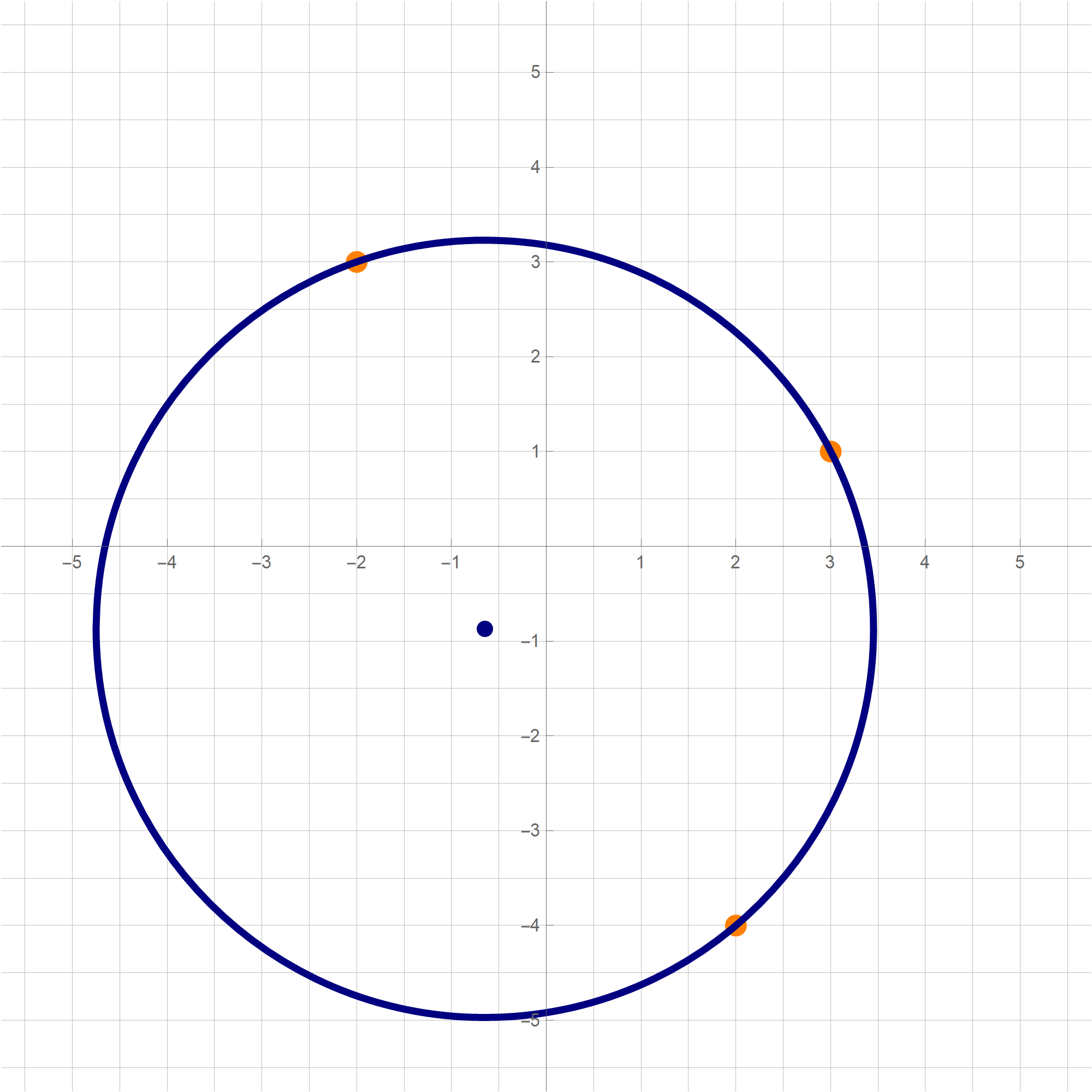

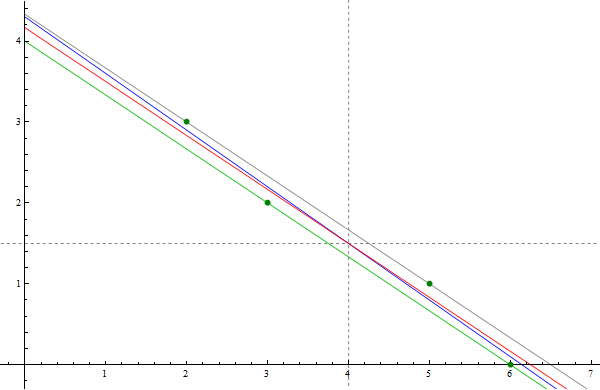

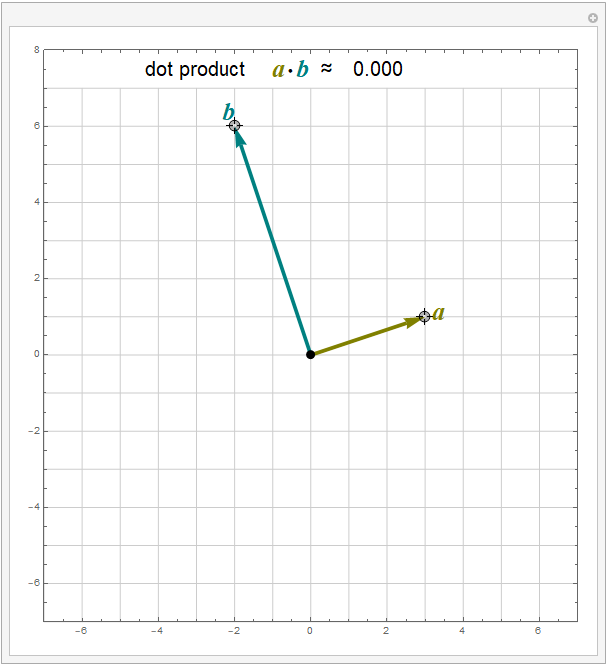

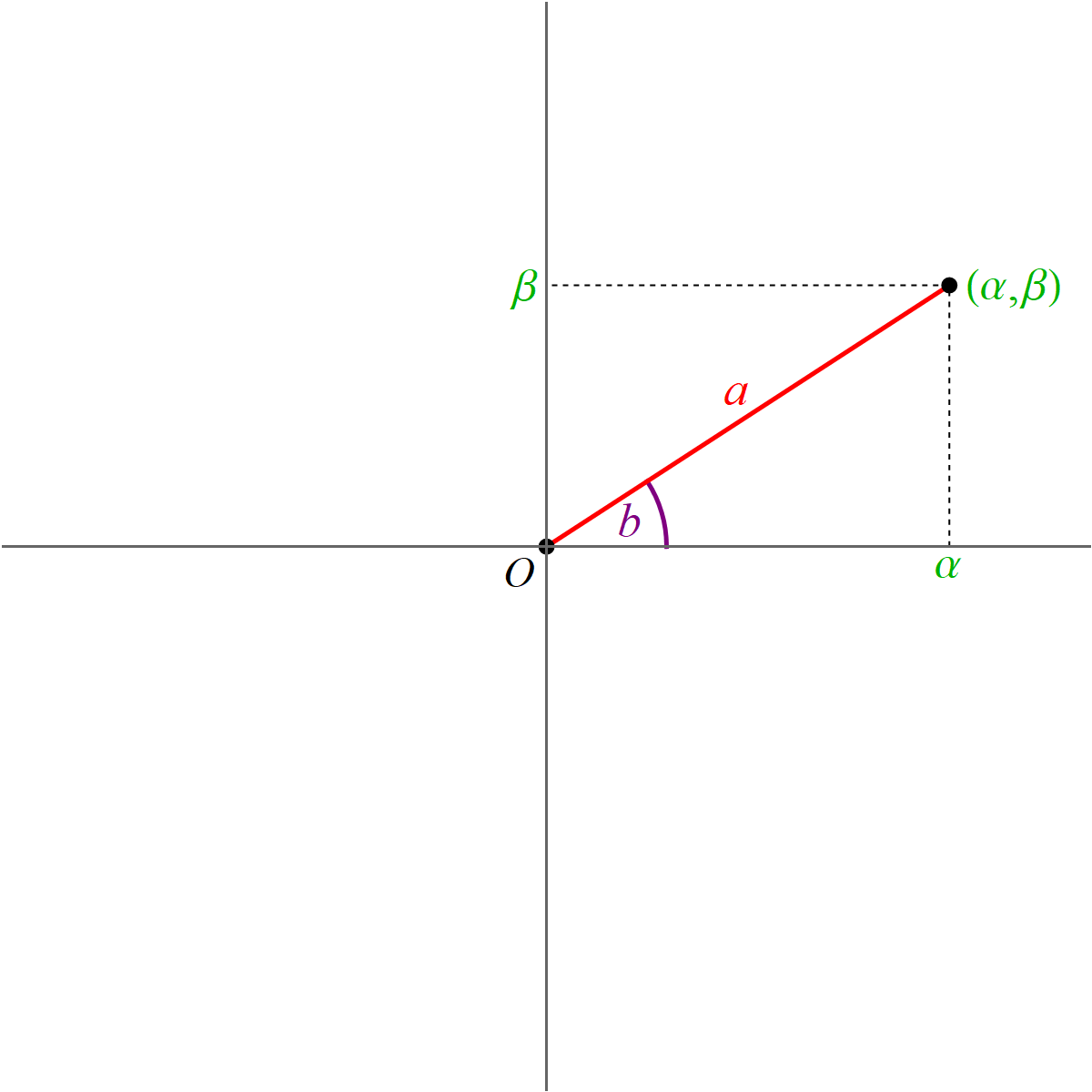

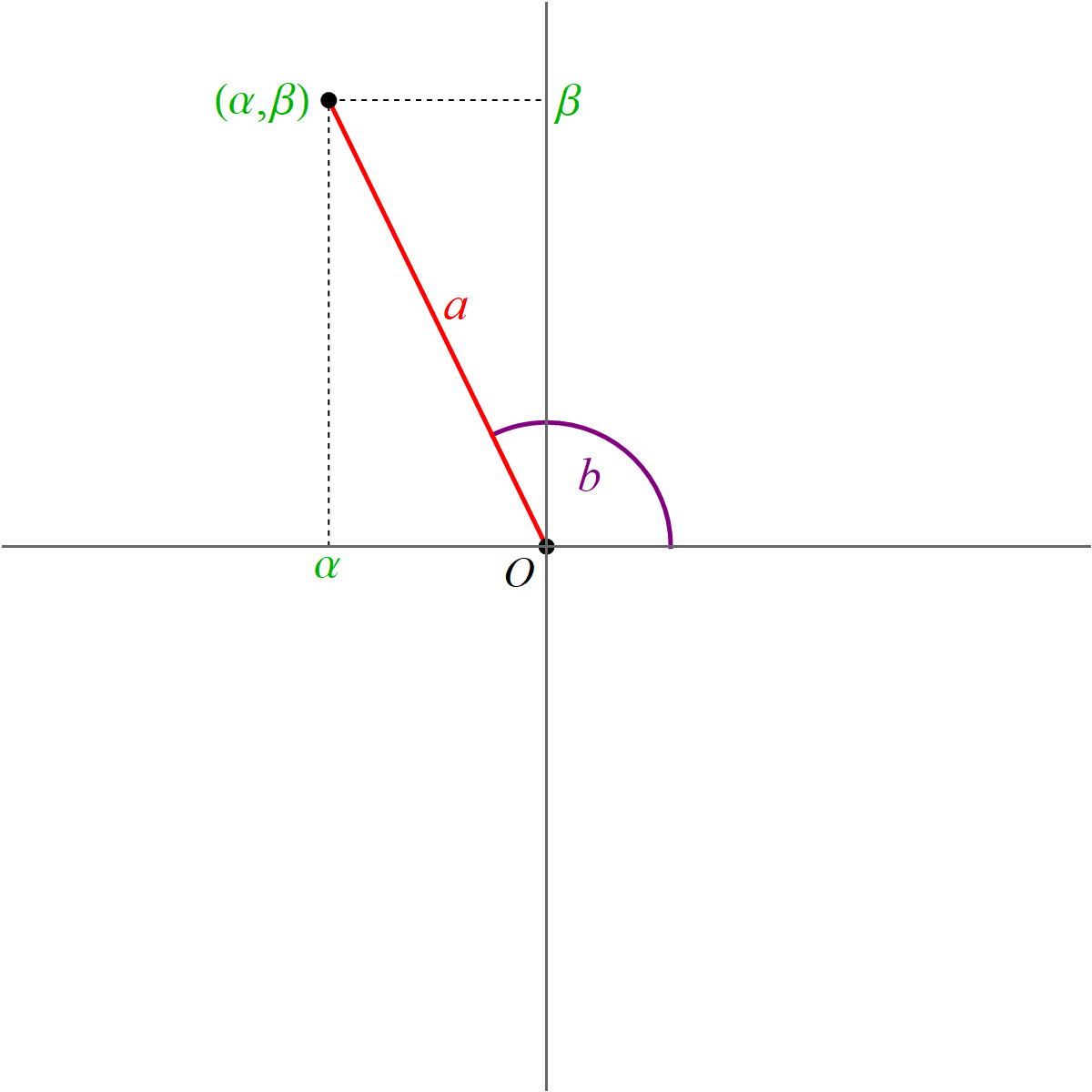

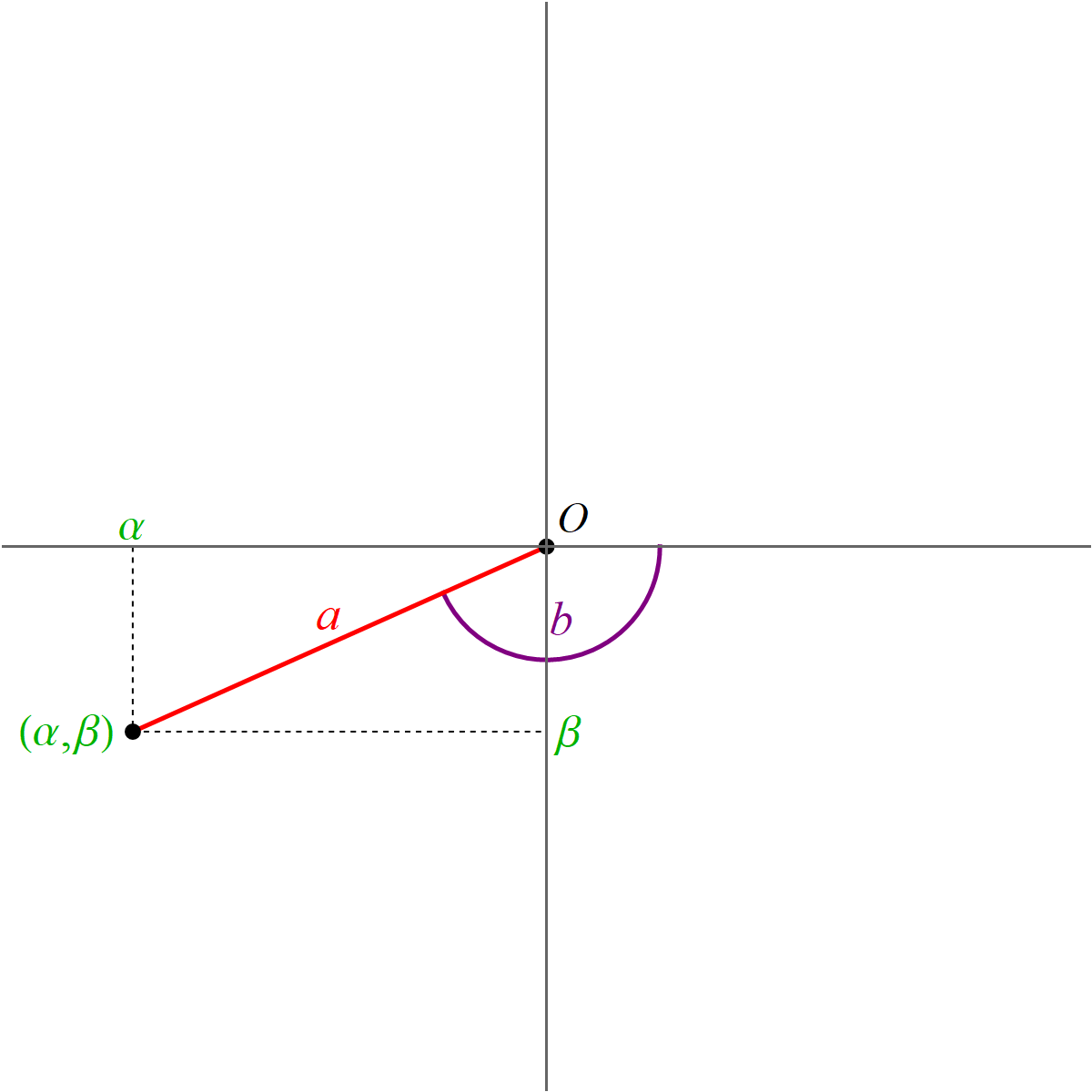

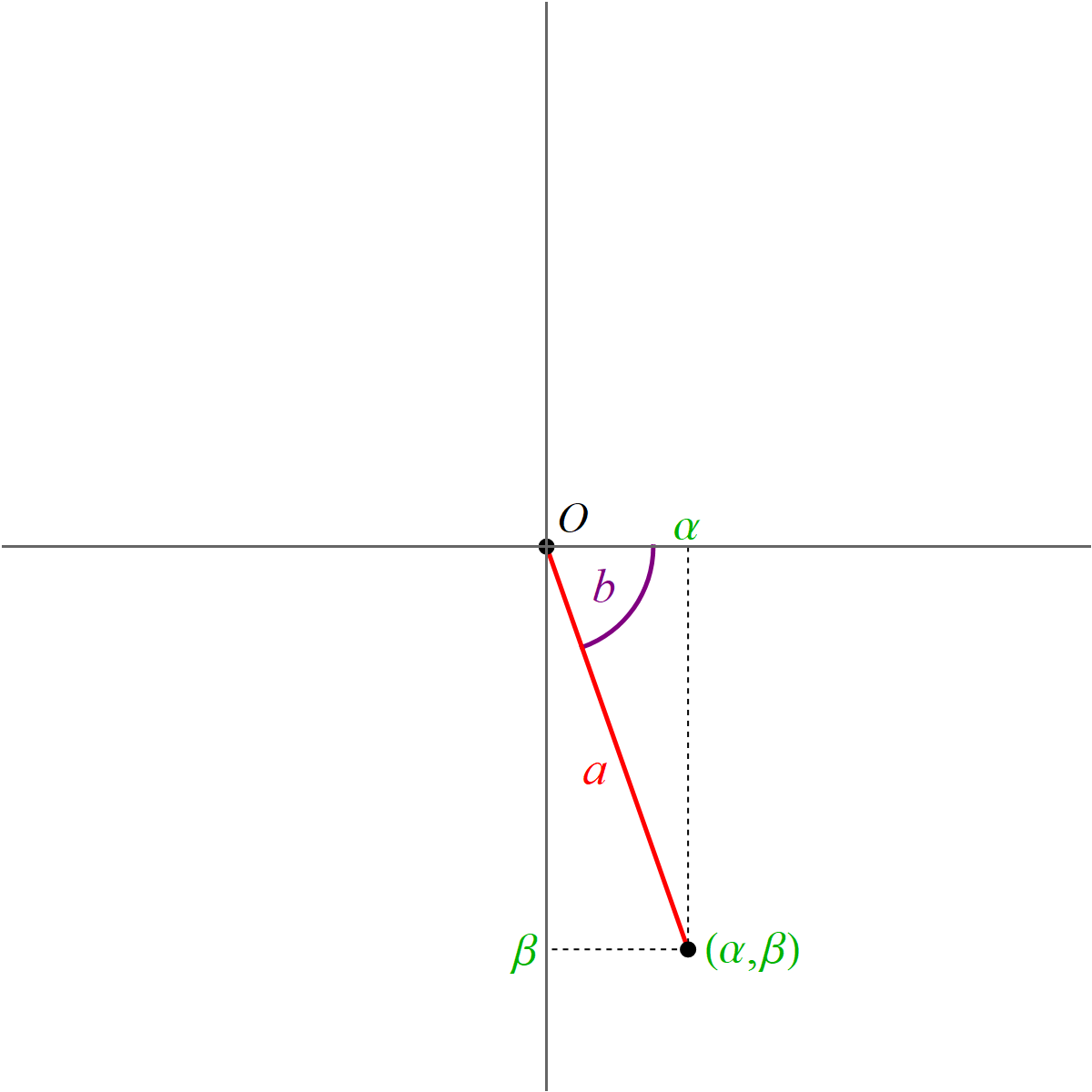

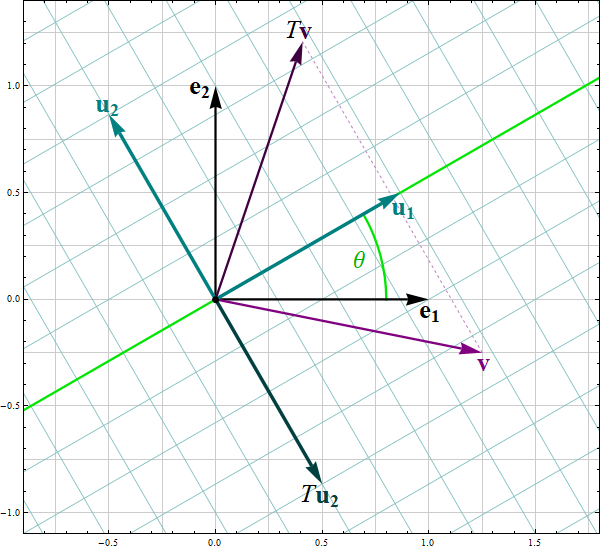

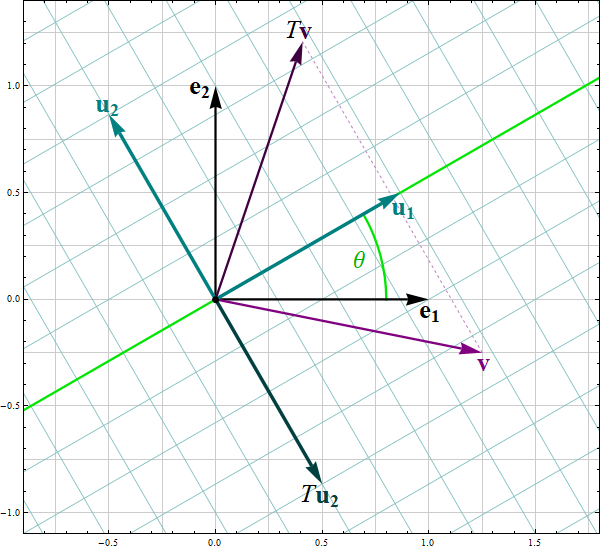

The coordinates $\mathbf{y}$ are the coordinates relative to the basis $\mathcal{B}$ which consists of the blue vectors in the next image

With the change of coordinates

\[

\mathbf{y} = \underset{\mathcal{B}\leftarrow\mathcal{E}}{P} \mathbf{x}, \qquad

\mathbf{x} = \underset{\mathcal{E}\leftarrow\mathcal{B}}{P} \mathbf{y},

\]

the quadratic form $Q$ simplifies as follows

\[

6 x_1^2 - 4 x_2 x_1 + 3 x_2^2 = 2 y_1^2 + 7 y_2^2.

\]

Clearly $2 y_1^2 + 7 y_2^2 \geq 0$ for all $y_1, y_2 \in \mathbb{R}$ and $2 y_1^2 + 7 y_2^2 = 0$ if and only if $(y_1,y_2) =(0,0).$ Therefore, the given quadratic form is positive definite.

With the change of coordinates

\[

\mathbf{y} = \underset{\mathcal{B}\leftarrow\mathcal{E}}{P} \mathbf{x}, \qquad

\mathbf{x} = \underset{\mathcal{E}\leftarrow\mathcal{B}}{P} \mathbf{y},

\]

the quadratic form $Q$ simplifies as follows

\[

6 x_1^2 - 4 x_2 x_1 + 3 x_2^2 = 2 y_1^2 + 7 y_2^2.

\]

Clearly $2 y_1^2 + 7 y_2^2 \geq 0$ for all $y_1, y_2 \in \mathbb{R}$ and $2 y_1^2 + 7 y_2^2 = 0$ if and only if $(y_1,y_2) =(0,0).$ Therefore, the given quadratic form is positive definite.

-

The above introduced change of coordinates yields

\[

\bigl\{ \mathbf{x} \in \mathbb{R}^2 \, : \, Q(\mathbf{x}) = -1 \bigr\} = \bigl\{ \mathbf{y} \in \mathbb{R}^2 \, : \, 2 y_1^2 + 7 y_2^2 = -1 \bigr\},

\]

(this set is clearly an empty set)

\[

\bigl\{ \mathbf{x} \in \mathbb{R}^2 \, : \, Q(\mathbf{x}) = 0 \bigr\} = \bigl\{ \mathbf{y} \in \mathbb{R}^2 \, : \, 2 y_1^2 + 7 y_2^2 = 0 \bigr\}

\]

(this set clearly consists of the zero vector only)

and

\[

\bigl\{ \mathbf{x} \in \mathbb{R}^2 \, : \, Q(\mathbf{x}) = 1 \bigr\} = \bigl\{ \mathbf{y} \in \mathbb{R}^2 \, : \, 2 y_1^2 + 7 y_2^2 = 1 \bigr\}.

\]

The set

\[

\bigl\{ (y_1,y_2) \in \mathbb{R}^2 \, : \, 2 y_1^2 + 7 y_2^2 = 1 \bigr\}

\]

is an ellipse. The vertices of this ellipse in the coordinate system relative to the basis $\mathcal{B}$ are

\[

\text{vertices}: \left(\frac{\sqrt{2}}{2}, 0 \right) , \ \left(-\frac{\sqrt{2}}{2}, 0 \right), \qquad

\text{co-vertices}: \left(0, \frac{\sqrt{7}}{7} \right) , \ \left(0, -\frac{\sqrt{7}}{7}\right).

\]

To get the coordinates of these points in the original coordinate system relative to the basis $\mathcal{E}$ we apply the change of coordinates matrix $\displaystyle \underset{\mathcal{E}\leftarrow\mathcal{B}}{P}$:

\[

\text{vertices}: \left(\frac{\sqrt{10}}{10},\frac{\sqrt{10}}{5} \right) , \ \left(-\frac{\sqrt{10}}{10}, - \frac{\sqrt{10}}{5} \right),

\]

\[

\text{co-vertices}: \left(-\frac{2 \sqrt{35}}{35}, \frac{\sqrt{35}}{35} \right) , \ \left(\frac{2 \sqrt{35}}{35}, -\frac{\sqrt{35}}{35} \right).

\]

- Since the change of coordinate matrices $\displaystyle \underset{\mathcal{E}\leftarrow\mathcal{B}}{P}$ and $\displaystyle \underset{\mathcal{B}\leftarrow\mathcal{E}}{P}$ are orthogonal we have \[ \| \mathbf{y} \|^2 = \mathbf{y}^\top \mathbf{y} = \left(\underset{\mathcal{B}\leftarrow\mathcal{E}}{P} \mathbf{x}\right)^\top \left(\underset{\mathcal{B}\leftarrow\mathcal{E}}{P} \mathbf{x}\right) = \mathbf{x}^\top \left(\underset{\mathcal{B}\leftarrow\mathcal{E}}{P}\right)^\top \underset{\mathcal{B}\leftarrow\mathcal{E}}{P} \mathbf{x} = \mathbf{x}^\top \mathbf{x} = \|\mathbf{x} \|^2. \] Therefore \[ S = \bigl\{ Q(\mathbf{x}) \, : \, \mathbf{x} \in \mathbb{R}^2, \ \| \mathbf{x} \| = 1 \bigr\} = \bigl\{ 2 y_1^2 + 7 y_2^2 \, : \, y_1^2 + y_2^2 = 1, \ y_1, y_2 \in\mathbb{R} \bigr\}. \] Since \[ 2 = 2 y_1^2 + 2 y_2^2 \leq 2 y_1^2 + 7 y_2^2 \leq 7 y_1^2 + 7 y_2^2 = 7 \] whenever $y_1^2 + y_2^2 = 1$, we have that $\min S = 2$ and $\max S = 7$. The form $2 y_1^2 + 7 y_2^2$ takes the value $2$ when $y_1 = 1, y_2 =0$ and $y_1 = -1, y_2 =0$. Using the change of coordinates matrix $\displaystyle \underset{\mathcal{E}\leftarrow\mathcal{B}}{P}$ we conclude that \[ \bigl\{ \mathbf{x} \in \mathbb{R}^2 \, : \, Q(\mathbf{x}) = 2 \bigr\} = \left\{ \left[\! \begin{array}{c} \frac{1}{\sqrt{5}} \\ \frac{2}{\sqrt{5}} \end{array} \!\right], - \left[\! \begin{array}{c} \frac{1}{\sqrt{5}} \\ \frac{2}{\sqrt{5}} \end{array} \!\right] \right\}. \] and \[ \bigl\{ \mathbf{x} \in \mathbb{R}^2 \, : \, Q(\mathbf{x}) = 7 \bigr\} = \left\{ \left[\! \begin{array}{c} \frac{-2}{\sqrt{5}} \\ \frac{1}{\sqrt{5}} \end{array} \!\right], \left[\! \begin{array}{c} \frac{2}{\sqrt{5}} \\ \frac{-1}{\sqrt{5}} \end{array} \!\right] \right\}. \]

-

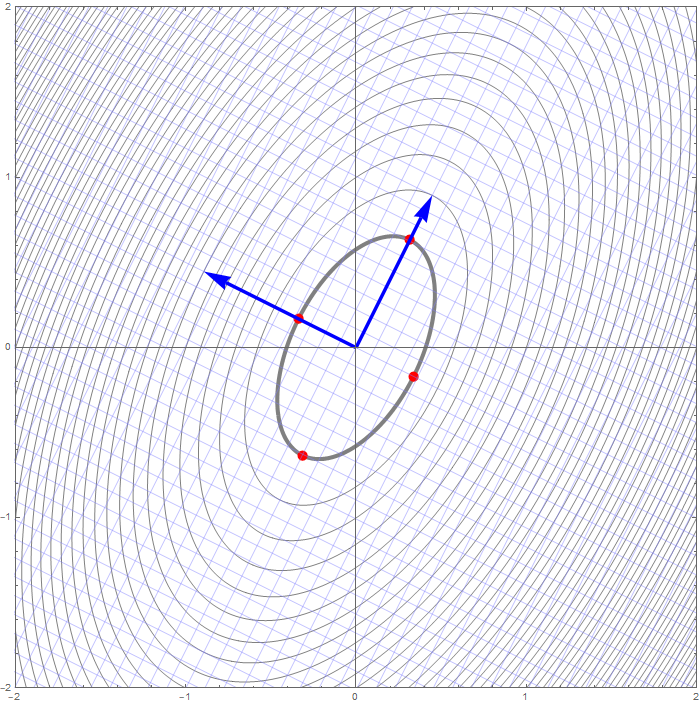

Example 2.

In this item we consider the quadratic form

\[

Q(x_1,x_2) = x_1^2 + 6 x_2 x_1 + x_2^2 \quad \text{where} \quad x_1, x_2 \in \mathbb{R}.

\]

- We have \[ Q(x_1,x_2) = \bigl[ x_1 \ \ x_2 \bigr] \left[\! \begin{array}{cc} 1 & 3 \\ 3 & 1 \end{array} \!\right] \left[\! \begin{array}{c} x_1 \\ x_2 \end{array} \!\right] = x_1^2 + 6 x_2 x_1 + x_2^2 \quad \text{where} \quad x_1, x_2 \in \mathbb{R}. \]

-

Clearly the quadratic form $Q$ is not a zero form. To classify $Q$ as positive semidefinite, negative semidefinite, indefinite we orthogonally diagonalize the matrix of this quadratic form:

\[

\left[\!

\begin{array}{cc}

1 & 3 \\

3 & 1

\end{array}

\!\right] = \left[\!

\begin{array}{cc}

\frac{1}{\sqrt{2}} & -\frac{1}{\sqrt{2}} \\

\frac{1}{\sqrt{2}} & \frac{1}{\sqrt{2}}

\end{array}

\!\right] \left[\!

\begin{array}{cc}

4 & 0 \\

0 & -2

\end{array}

\!\right] \left[\!

\begin{array}{cc}

\frac{1}{\sqrt{2}} & -\frac{1}{\sqrt{2}} \\

\frac{1}{\sqrt{2}} & \frac{1}{\sqrt{2}}

\end{array}

\!\right]^\top

\]

Let us introduce two bases

\[

\mathcal{B} = \left\{ \left[\!

\begin{array}{c}

\frac{1}{\sqrt{2}} \\

\frac{1}{\sqrt{2}}

\end{array}

\!\right], \left[\!

\begin{array}{c}

-\frac{1}{\sqrt{2}} \\

\frac{1}{\sqrt{2}}

\end{array}

\!\right] \right\} \qquad \text{and} \qquad

\mathcal{E} = \left\{ \left[\!

\begin{array}{c} 1 \\ 0 \end{array}

\!\right], \left[\!

\begin{array}{c}

0 \\ 1

\end{array}

\!\right] \right\}.

\]

The above orthogonal diagonalization suggests a very useful change of coordinates:

\[

\mathbf{y} = \left[\!

\begin{array}{cc}

\frac{1}{\sqrt{2}} & -\frac{1}{\sqrt{2}} \\

\frac{1}{\sqrt{2}} & \frac{1}{\sqrt{2}}

\end{array}

\!\right]^\top \mathbf{x} = \underset{\mathcal{B}\leftarrow\mathcal{E}}{P} \mathbf{x}, \qquad

\mathbf{x} = \left[\!

\begin{array}{cc}

\frac{1}{\sqrt{2}} & -\frac{1}{\sqrt{2}} \\

\frac{1}{\sqrt{2}} & \frac{1}{\sqrt{2}}

\end{array}

\!\right] \mathbf{y} = \underset{\mathcal{E}\leftarrow\mathcal{B}}{P} \mathbf{y}.

\]

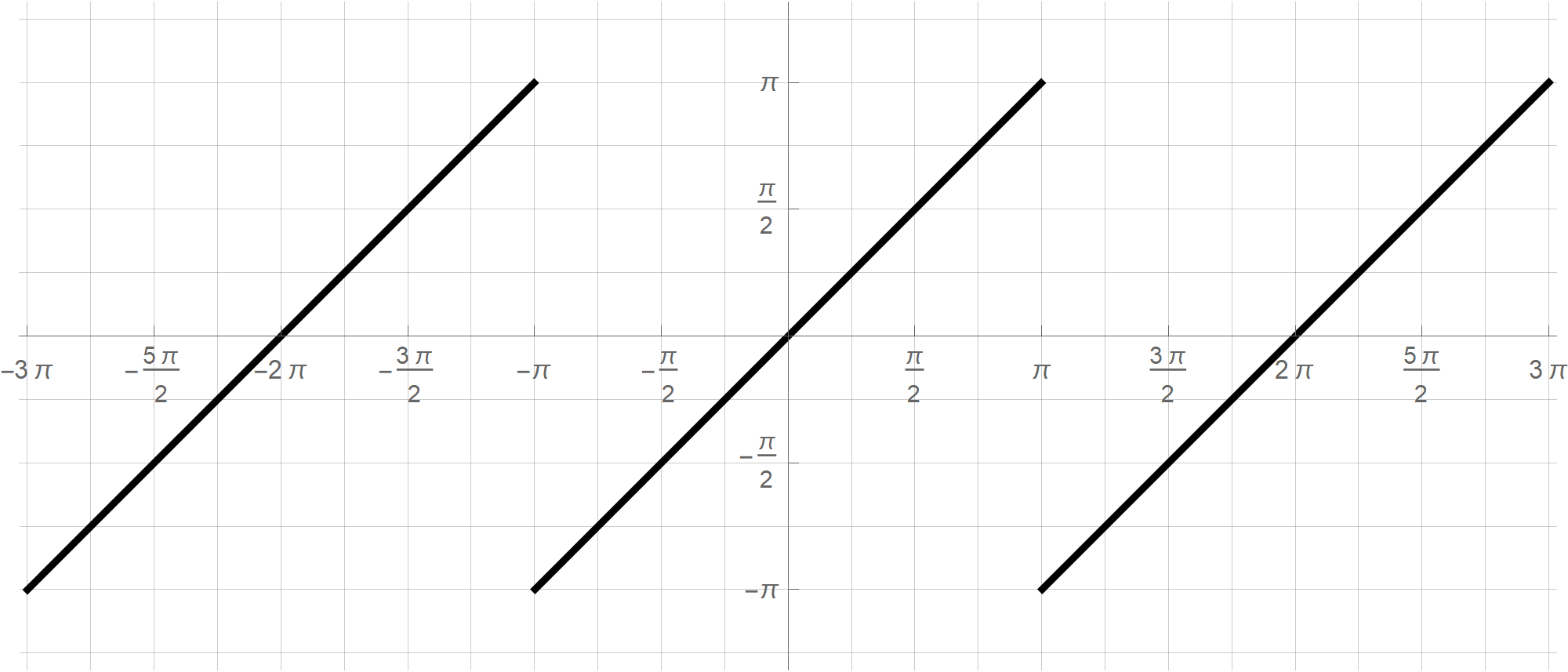

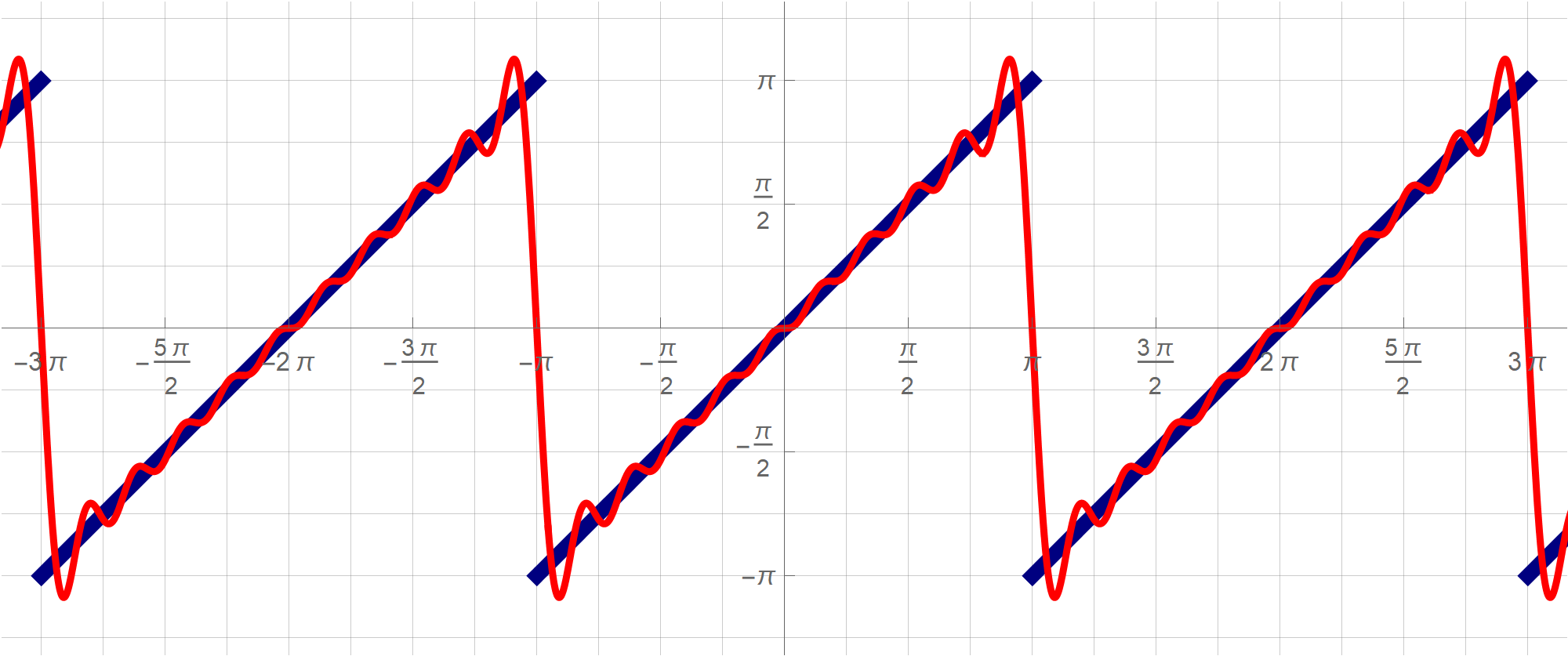

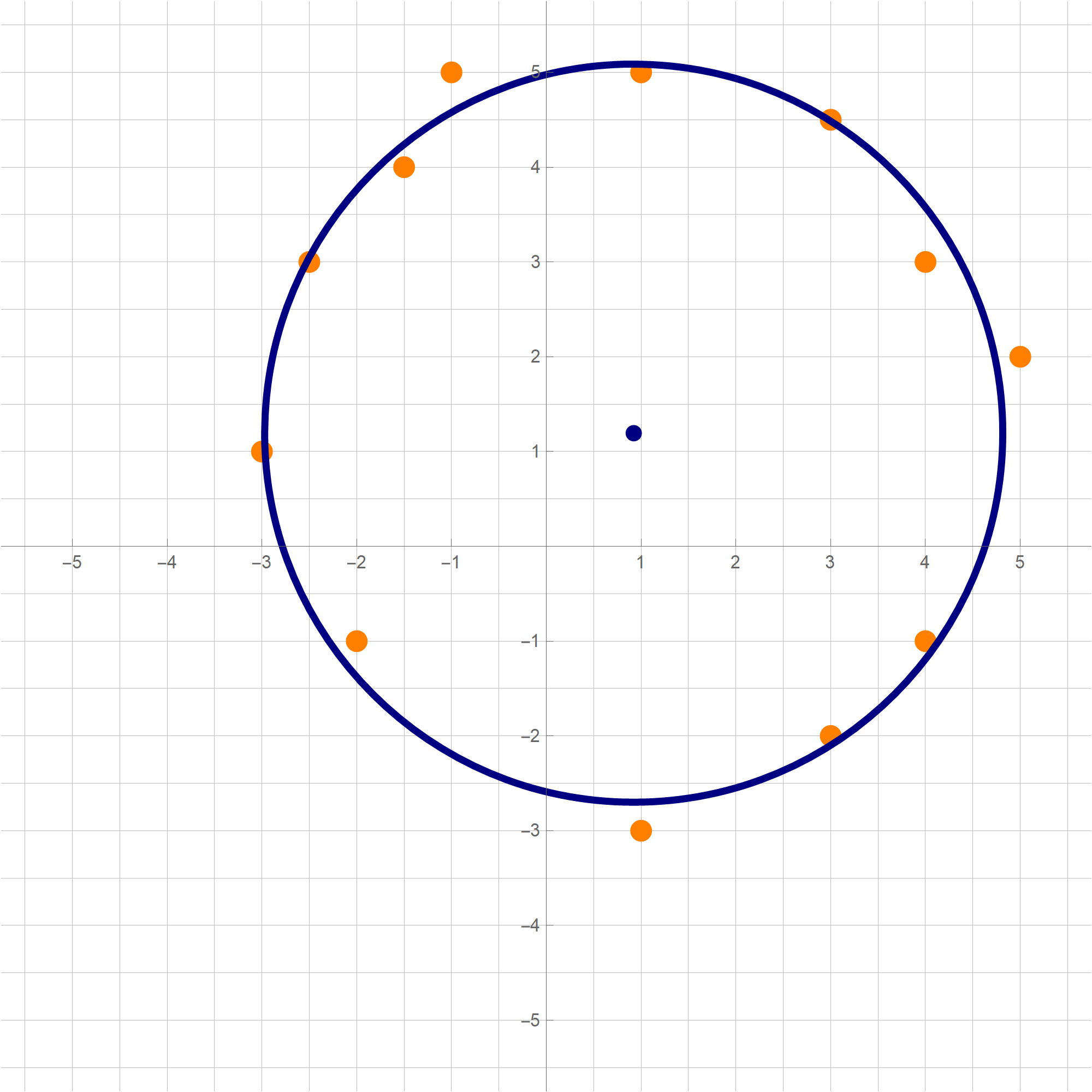

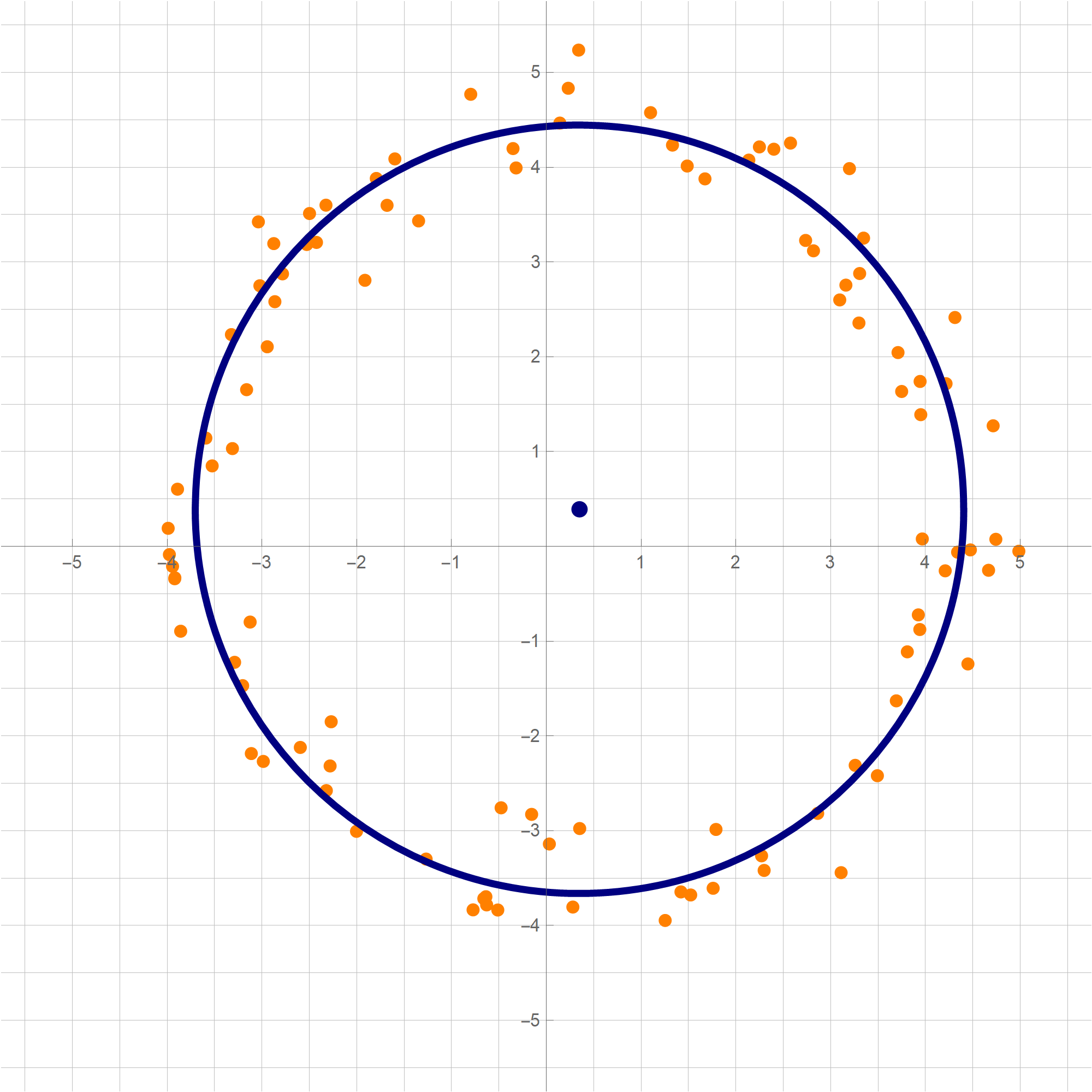

The coordinates $\mathbf{y}$ are the coordinates relative to the basis $\mathcal{B}$ which consists of the blue vectors in the next image

With the change of coordinates

\[

\mathbf{y} = \underset{\mathcal{B}\leftarrow\mathcal{E}}{P} \mathbf{x}, \qquad

\mathbf{x} = \underset{\mathcal{E}\leftarrow\mathcal{B}}{P} \mathbf{y},

\]

the quadratic form $Q$ simplifies as follows

\[

x_1^2 + 6 x_2 x_1 + x_2^2 = 4 y_1^2 - 2 y_2^2.

\]

Clearly $4 y_1^2 - 2 y_2^2$ is an indefinite form taking the value $4$ at $(y_1,y_2) = (1,0)$ and the value $-2$ at $(y_1,y_2) = (0,1).$

With the change of coordinates

\[

\mathbf{y} = \underset{\mathcal{B}\leftarrow\mathcal{E}}{P} \mathbf{x}, \qquad

\mathbf{x} = \underset{\mathcal{E}\leftarrow\mathcal{B}}{P} \mathbf{y},

\]

the quadratic form $Q$ simplifies as follows

\[

x_1^2 + 6 x_2 x_1 + x_2^2 = 4 y_1^2 - 2 y_2^2.

\]

Clearly $4 y_1^2 - 2 y_2^2$ is an indefinite form taking the value $4$ at $(y_1,y_2) = (1,0)$ and the value $-2$ at $(y_1,y_2) = (0,1).$

-

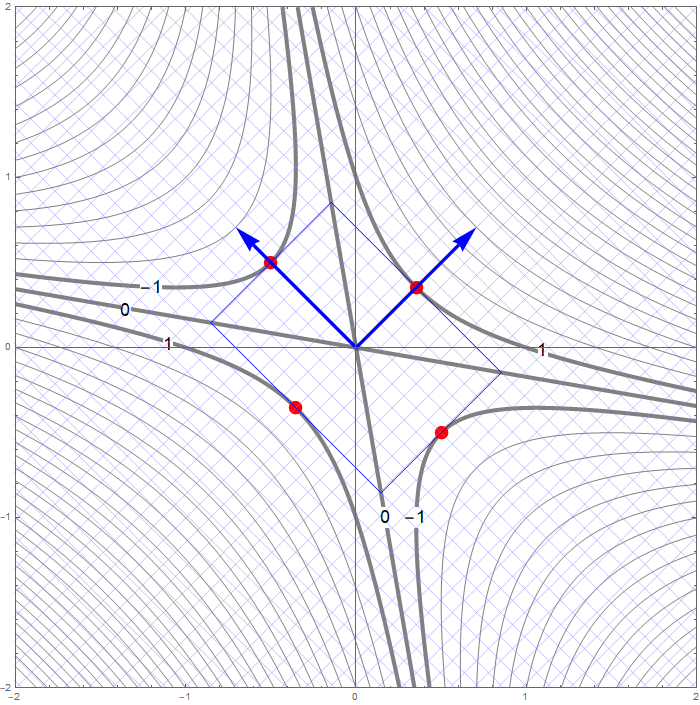

The above introduced change of coordinates yields

\[

\bigl\{ \mathbf{x} \in \mathbb{R}^2 \, : \, Q(\mathbf{x}) = -1 \bigr\} = \bigl\{ \mathbf{y} \in \mathbb{R}^2 \, : \, 4 y_1^2 - 2 y_2^2 = -1 \bigr\},

\]

\[

\bigl\{ \mathbf{x} \in \mathbb{R}^2 \, : \, Q(\mathbf{x}) = 0 \bigr\} = \bigl\{ \mathbf{y} \in \mathbb{R}^2 \, : \, 4 y_1^2 - 2 y_2^2 = 0 \bigr\}

\]

and

\[

\bigl\{ \mathbf{x} \in \mathbb{R}^2 \, : \, Q(\mathbf{x}) = 1 \bigr\} = \bigl\{ \mathbf{y} \in \mathbb{R}^2 \, : \, 4 y_1^2 - 2 y_2^2 = 1 \bigr\}.

\]

The set

\[

\bigl\{ (y_1,y_2) \in \mathbb{R}^2 \, : \, 4 y_1^2 - 2 y_2^2 = 1 \bigr\}

\]

is a hyperbola. The vertices of this hyperbola in the coordinate system relative to the basis $\mathcal{B}$ are

\[

\text{vertices}: \left(\frac{1}{2}, 0 \right) , \ \left(-\frac{1}{2}, 0 \right)

\]

and the asymptotes of this hyperbola are two lines which are determined by the vectors

\[

\left[\!

\begin{array}{c}

\frac{1}{2} \\ \frac{\sqrt{2}}{2}

\end{array}

\!\right] \qquad \text{and} \qquad \left[\!

\begin{array}{c}

\frac{1}{2} \\ -\frac{\sqrt{2}}{2}

\end{array}

\!\right].

\]

To get the coordinates of the vertices in the original coordinate system relative to the basis

$\mathcal{E}$ we apply the change of coordinates matrix $\displaystyle \underset{\mathcal{E}\leftarrow\mathcal{B}}{P}$:

\[

\text{vertices}: \left(\frac{\sqrt{2}}{4},\frac{\sqrt{2}}{4} \right) , \ \left(-\frac{\sqrt{2}}{4},-\frac{\sqrt{2}}{4} \right),

\]

and the asymptotes are determined by the vectors

\[

\left[\!

\begin{array}{c}

\frac{-2+\sqrt{2}}{4} \\ \frac{2+\sqrt{2}}{4}

\end{array}

\!\right] \qquad \text{and} \qquad \left[\!

\begin{array}{c}

\frac{2+\sqrt{2}}{4} \\ \frac{-2+\sqrt{2}}{4}

\end{array}

\!\right].

\]

The set

\[

\bigl\{ (y_1,y_2) \in \mathbb{R}^2 \, : \, 4 y_1^2 - 2 y_2^2 = 0 \bigr\}

\]

is a union of two lines that go through the origin. These lines are the asymptotes of the preceding hyperbola and are determined by the vectors

\[

\left[\!

\begin{array}{c}

\frac{1}{2} \\ \frac{\sqrt{2}}{2}

\end{array}

\!\right] \qquad \text{and} \qquad \left[\!

\begin{array}{c}

\frac{1}{2} \\ -\frac{\sqrt{2}}{2}

\end{array}

\!\right],

\]

in the coordinates relative to the basis $\mathcal{B}$. To get the coordinates in the original coordinate system relative to the basis $\mathcal{E}$ we apply the change of coordinates matrix $\displaystyle \underset{\mathcal{E}\leftarrow\mathcal{B}}{P}$:

\[

\left[\!

\begin{array}{c}

\frac{-2+\sqrt{2}}{4} \\ \frac{2+\sqrt{2}}{4}

\end{array}

\!\right] \qquad \text{and} \qquad \left[\!

\begin{array}{c}

\frac{2+\sqrt{2}}{4} \\ \frac{-2+\sqrt{2}}{4}

\end{array}

\!\right].

\]

The set

\[

\bigl\{ (y_1,y_2) \in \mathbb{R}^2 \, : \, 4 y_1^2 - 2 y_2^2 = -1 \bigr\}

\]

is a hyperbola. The vertices of this hyperbola in the coordinate system relative to the basis $\mathcal{B}$ are

\[

\text{vertices}: \left(0, \frac{\sqrt{2}}{2}\right) , \ \left(0, -\frac{\sqrt{2}}{2} \right)

\]

and the asymptotes of this hyperbola are two lines which are determined by the vectors

\[

\left[\!

\begin{array}{c}

\frac{1}{2} \\ \frac{\sqrt{2}}{2}

\end{array}

\!\right] \qquad \text{and} \qquad \left[\!

\begin{array}{c}

\frac{1}{2} \\ -\frac{\sqrt{2}}{2}

\end{array}

\!\right].

\]

To get the coordinates of the the vertices in the original coordinate system relative to the basis $\mathcal{E}$ we apply the change of coordinates matrix $\displaystyle \underset{\mathcal{E}\leftarrow\mathcal{B}}{P}$:

\[

\text{vertices}: \left(-\frac{1}{2},\frac{1}{2} \right) , \ \left(\frac{1}{2}, -\frac{1}{2} \right),

\]

and the asymptotes are determined by the vectors

\[

\left[\!

\begin{array}{c}

\frac{-2+\sqrt{2}}{4} \\ \frac{2+\sqrt{2}}{4}

\end{array}

\!\right] \qquad \text{and} \qquad \left[\!

\begin{array}{c}

\frac{2+\sqrt{2}}{4} \\ \frac{-2+\sqrt{2}}{4}

\end{array}

\!\right].

\]

- Since the change of coordinate matrices $\displaystyle \underset{\mathcal{E}\leftarrow\mathcal{B}}{P}$ and $\displaystyle \underset{\mathcal{B}\leftarrow\mathcal{E}}{P}$ are orthogonal we have \[ \| \mathbf{y} \|^2 = \mathbf{y}^\top \mathbf{y} = \left(\underset{\mathcal{B}\leftarrow\mathcal{E}}{P} \mathbf{x}\right)^\top \left(\underset{\mathcal{B}\leftarrow\mathcal{E}}{P} \mathbf{x}\right) = \mathbf{x}^\top \left(\underset{\mathcal{B}\leftarrow\mathcal{E}}{P}\right)^\top \underset{\mathcal{B}\leftarrow\mathcal{E}}{P} \mathbf{x} = \mathbf{x}^\top \mathbf{x} = \|\mathbf{x} \|^2. \] Therefore \[ S = \bigl\{ Q(\mathbf{x}) \, : \, \mathbf{x} \in \mathbb{R}^2, \ \| \mathbf{x} \| = 1 \bigr\} = \bigl\{ 4 y_1^2 - 2 y_2^2 \, : \, y_1^2 + y_2^2 = 1, \ y_1, y_2 \in\mathbb{R} \bigr\}. \] Since \[ -2 = -2 y_1^2 - 2 y_2^2 \leq 4 y_1^2 - 2 y_2^2 \leq 4 y_1^2 + 4 y_2^2 = 4 \] whenever $y_1^2 + y_2^2 = 1$, we have that $\min S = -2$ and $\max S = 4$. The form $4 y_1^2 - 2 y_2^2$ takes the value $-2$ when $y_1 = 0, y_2 = 1$ or $y_1 = 0, y_2 =-1$ and the value $4$ when $y_1 = 1, y_2 = 0$ or $y_1 = -1, y_2 =0$. Using the change of coordinates matrix $\displaystyle \underset{\mathcal{E}\leftarrow\mathcal{B}}{P}$ we conclude that \[ \bigl\{ \mathbf{x} \in \mathbb{R}^2 \, : \, Q(\mathbf{x}) = -2 \bigr\} = \left\{ \left[\! \begin{array}{c} -\frac{1}{\sqrt{2}} \\ \frac{1}{\sqrt{2}} \end{array} \!\right], \left[\! \begin{array}{c} \frac{1}{\sqrt{2}} \\ -\frac{1}{\sqrt{2}} \end{array} \!\right] \right\}. \] and \[ \bigl\{ \mathbf{x} \in \mathbb{R}^2 \, : \, Q(\mathbf{x}) = 4 \bigr\} = \left\{ \left[\! \begin{array}{c} \frac{1}{\sqrt{2}} \\ \frac{1}{\sqrt{2}} \end{array} \!\right], \left[\! \begin{array}{c} -\frac{1}{\sqrt{2}} \\ -\frac{1}{\sqrt{2}} \end{array} \!\right] \right\}. \]

-

Example 3.

In this item we consider the quadratic form

\[

Q(x_1, x_2, x_3) = x_1^2 - 4 x_1 x_2 +4 x_2 x_3 - x_3^2 \quad \text{where} \quad x_1, x_2 \in \mathbb{R}.

\]

- We have \[ Q(x_1, x_2, x_3) = \bigl[ x_1 \ \ x_2 \ \ x_3 \bigr] \left[\! \begin{array}{ccc} 1 & -2 & 0 \\ -2 & 0 & 2 \\ 0 & 2 & -1 \end{array} \!\right] \left[\! \begin{array}{c} x_1 \\ x_2 \\ x_3 \end{array} \!\right] = x_1^2 - 4 x_1 x_2 +4 x_2 x_3 - x_3^2 \quad \text{where} \quad x_1, x_2, x_3 \in \mathbb{R}. \]

- Clearly the quadratic form $Q$ is not a zero form. To classify $Q$ as positive semidefinite, negative semidefinite, indefinite we orthogonally diagonalize the matrix of this quadratic form: \[ \left[\! \begin{array}{ccc} 1 & -2 & 0 \\ -2 & 0 & 2 \\ 0 & 2 & -1 \end{array} \!\right] = \left[\!\begin{array}{ccc} -\frac{1}{3} & -\frac{2}{3} & \frac{2}{3} \\ -\frac{2}{3} & \frac{2}{3} & \frac{1}{3} \\ \frac{2}{3} & \frac{1}{3} & \frac{2}{3} \\ \end{array} \!\right] \left[\! \begin{array}{ccc} -3 & 0 & 0 \\ 0 & 3 & 0 \\ 0 & 0 & 0 \end{array} \!\right] \left[\!\begin{array}{ccc} -\frac{1}{3} & -\frac{2}{3} & \frac{2}{3} \\ -\frac{2}{3} & \frac{2}{3} & \frac{1}{3} \\ \frac{2}{3} & \frac{1}{3} & \frac{2}{3} \\ \end{array} \!\right]^\top \] Let us introduce two bases \[ \mathcal{B} = \left\{ \left[\! \begin{array}{c} -\frac{1}{3} \\ -\frac{2}{3} \\ \frac{2}{3} \end{array} \!\right], \left[\! \begin{array}{c} -\frac{2}{3} \\ \frac{2}{3} \\ \frac{1}{3} \end{array} \!\right], \left[\! \begin{array}{c} \frac{2}{3} \\ \frac{1}{3} \\ \frac{2}{3} \end{array} \!\right] \right\} \qquad \text{and} \qquad \mathcal{E} = \left\{ \left[\! \begin{array}{c} 1 \\ 0 \\ 0 \end{array} \!\right], \left[\! \begin{array}{c} 0 \\ 1 \\ 0 \end{array} \!\right], \left[\! \begin{array}{c} 0 \\ 0 \\ 1 \end{array} \!\right] \right\}. \] The above orthogonal diagonalization suggests a very useful change of coordinates: \[ \mathbf{y} = \left[\!\begin{array}{ccc} -\frac{1}{3} & -\frac{2}{3} & \frac{2}{3} \\ -\frac{2}{3} & \frac{2}{3} & \frac{1}{3} \\ \frac{2}{3} & \frac{1}{3} & \frac{2}{3} \\ \end{array} \!\right]^\top \mathbf{x} = \underset{\mathcal{B}\leftarrow\mathcal{E}}{P} \mathbf{x}, \qquad \mathbf{x} = \left[\!\begin{array}{ccc} -\frac{1}{3} & -\frac{2}{3} & \frac{2}{3} \\ -\frac{2}{3} & \frac{2}{3} & \frac{1}{3} \\ \frac{2}{3} & \frac{1}{3} & \frac{2}{3} \\ \end{array} \!\right] \mathbf{y} = \underset{\mathcal{E}\leftarrow\mathcal{B}}{P} \mathbf{y}. \] The coordinates $\mathbf{y}$ are the coordinates relative to the basis $\mathcal{B}$ With the change of coordinates \[ \mathbf{y} = \underset{\mathcal{B}\leftarrow\mathcal{E}}{P} \mathbf{x}, \qquad \mathbf{x} = \underset{\mathcal{E}\leftarrow\mathcal{B}}{P} \mathbf{y}, \] the quadratic form $Q$ simplifies as follows \[ x_1^2 - 4 x_1 x_2 +4 x_2 x_3 - x_3^2 = - 3 y_1^2 + 3 y_2^2. \] Clearly the form $- 3 y_1^2 + 3 y_2^2$ is an indefinite form taking the value $-3$ at $(y_1,y_2,y_3) = (1,0,0)$ and the value $3$ at $(y_1,y_2,y_3) = (0,1,0).$

-

The above introduced change of coordinates yields

\[

\bigl\{ \mathbf{x} \in \mathbb{R}^3 \, : \, Q(\mathbf{x}) = -1 \bigr\} = \bigl\{ \mathbf{y} \in \mathbb{R}^3 \, : \, - 3 y_1^2 + 3 y_2^2 = -1 \bigr\},

\]

\[

\bigl\{ \mathbf{x} \in \mathbb{R}^3 \, : \, Q(\mathbf{x}) = 0 \bigr\} = \bigl\{ \mathbf{y} \in \mathbb{R}^3 \, : \, - 3 y_1^2 + 3 y_2^2 = 0 \bigr\},

\]

and

\[

\bigl\{ \mathbf{x} \in \mathbb{R}^3 \, : \, Q(\mathbf{x}) = 1 \bigr\} = \bigl\{ \mathbf{y} \in \mathbb{R}^3 \, : \, - 3 y_1^2 + 3 y_2^2 = 1 \bigr\}.

\]

The set

\[

\bigl\{ \mathbf{x} \in \mathbb{R}^3 \, : \, Q(\mathbf{x}) = 0 \bigr\} = \bigl\{ \mathbf{y} \in \mathbb{R}^3 \, : \, - 3 y_1^2 + 3 y_2^2 = 0 \bigr\}

\]

is a union of two planes. These planes are represented by the following two spans:

\[

\operatorname{Span} \left\{

\left[\!

\begin{array}{c}

1 \\ 1 \\ 0

\end{array}

\!\right],

\left[\!

\begin{array}{c}

0 \\ 0 \\ 1

\end{array}

\!\right]

\right\} \qquad \text{and} \qquad \operatorname{Span} \left\{

\left[\!

\begin{array}{c}

1 \\ -1 \\ 0

\end{array}

\!\right],

\left[\!

\begin{array}{c}

0 \\ 0 \\ 1

\end{array}

\!\right]

\right\}

\]

in the coordinates relative to the basis $\mathcal{B}$. To get the spans of the vertices in the original coordinate system relative to the basis $\mathcal{E}$ we apply the change of coordinates matrix $\displaystyle \underset{\mathcal{E}\leftarrow\mathcal{B}}{P}$:

\[

\operatorname{Span} \left\{

\left[\!

\begin{array}{c}

-1 \\ 0 \\ 1

\end{array}

\!\right],

\left[\!

\begin{array}{c}

2 \\ 1 \\ 2

\end{array}

\!\right]

\right\} \qquad \text{and} \qquad \operatorname{Span} \left\{

\left[\!

\begin{array}{c}

1 \\ -4 \\ 1

\end{array}

\!\right],

\left[\!

\begin{array}{c}

2 \\ 1 \\ 2

\end{array}

\!\right]

\right\}

\]

The set

\[

\bigl\{ \mathbf{x} \in \mathbb{R}^3 \, : \, Q(\mathbf{x}) = 1 \bigr\} = \bigl\{ \mathbf{y} \in \mathbb{R}^3 \, : \, - 3 y_1^2 + 3 y_2^2 = 1 \bigr\}

\]

is a hyperbolic cylinder. The equation $- 3 y_1^2 + 3 y_2^2 = 1$ represents a hyperbola in the plane spanned by the vectors

\[

\left[\!

\begin{array}{c}

-\frac{1}{3} \\ -\frac{2}{3} \\ \frac{2}{3}

\end{array}

\!\right] \quad \text{and} \quad \left[\!

\begin{array}{c}

-\frac{2}{3} \\ \frac{2}{3} \\ \frac{1}{3}

\end{array}

\!\right] \quad \text{coordinates relative to} \quad \mathcal{E}.

\]

The cylinder is formed by the parallel lines that go through the points on the hyperbola and are orthogonal to the plane spanned by the above two vectors.

The set

\[

\bigl\{ \mathbf{x} \in \mathbb{R}^3 \, : \, Q(\mathbf{x}) = -1 \bigr\} = \bigl\{ \mathbf{y} \in \mathbb{R}^3 \, : \, - 3 y_1^2 + 3 y_2^2 = -1 \bigr\}

\]

is a hyperbolic cylinder. The equation $- 3 y_1^2 + 3 y_2^2 = -11$ represents a hyperbola in the plane spanned by the vectors

\[

\left[\!

\begin{array}{c}

-\frac{1}{3} \\ -\frac{2}{3} \\ \frac{2}{3}

\end{array}

\!\right] \quad \text{and} \quad \left[\!

\begin{array}{c}

-\frac{2}{3} \\ \frac{2}{3} \\ \frac{1}{3}

\end{array}

\!\right] \quad \text{coordinates relative to} \quad \mathcal{E}.

\]

The cylinder is formed by the parallel lines that go through the points on the hyperbola and are orthogonal to the plane spanned by the above two vectors.

The set

\[

\bigl\{ \mathbf{x} \in \mathbb{R}^3 \, : \, Q(\mathbf{x}) = -1 \bigr\} = \bigl\{ \mathbf{y} \in \mathbb{R}^3 \, : \, - 3 y_1^2 + 3 y_2^2 = -1 \bigr\}

\]

is a hyperbolic cylinder. The equation $- 3 y_1^2 + 3 y_2^2 = -11$ represents a hyperbola in the plane spanned by the vectors

\[

\left[\!

\begin{array}{c}

-\frac{1}{3} \\ -\frac{2}{3} \\ \frac{2}{3}

\end{array}

\!\right] \quad \text{and} \quad \left[\!

\begin{array}{c}

-\frac{2}{3} \\ \frac{2}{3} \\ \frac{1}{3}

\end{array}

\!\right] \quad \text{coordinates relative to} \quad \mathcal{E}.

\]

The cylinder is formed by the parallel lines that go through the points on the hyperbola and are orthogonal to the plane spanned by the above two vectors.

- Since the change of coordinate matrices $\displaystyle \underset{\mathcal{E}\leftarrow\mathcal{B}}{P}$ and $\displaystyle \underset{\mathcal{B}\leftarrow\mathcal{E}}{P}$ are orthogonal we have \[ \| \mathbf{y} \|^2 = \mathbf{y}^\top \mathbf{y} = \left(\underset{\mathcal{B}\leftarrow\mathcal{E}}{P} \mathbf{x}\right)^\top \left(\underset{\mathcal{B}\leftarrow\mathcal{E}}{P} \mathbf{x}\right) = \mathbf{x}^\top \left(\underset{\mathcal{B}\leftarrow\mathcal{E}}{P}\right)^\top \underset{\mathcal{B}\leftarrow\mathcal{E}}{P} \mathbf{x} = \mathbf{x}^\top \mathbf{x} = \|\mathbf{x} \|^2. \] Therefore \[ S = \bigl\{ Q(\mathbf{x}) \, : \, \mathbf{x} \in \mathbb{R}^3, \ \| \mathbf{x} \| = 1 \bigr\} = \bigl\{ -3 y_1^2 + 3 y_2^2 \, : \, y_1^2 + y_2^2 + y_3^2 = 1, \ y_1, y_2, y_3 \in\mathbb{R} \bigr\}. \] Since \[ -3 = -3 y_1^2 - 3 y_2^2 - 3 y_3^2 \leq -3 y_1^2 + 3 y_2^2 \leq 3 y_1^2 + 3 y_2^2 + 3 y_3^2 = 3 \] whenever $y_1^2 + y_2^2 + y_3^2 = 1$, we have that $\min S = -3$ and $\max S = 3$. The form $-3 y_1^2 + 3 y_2^2$ takes the value $-3$ when $y_1 = 1, y_2 = 0$ or $y_1 = -1, y_2 =0$ and the value $3$ when $y_1 = 0, y_2 = 1$ or $y_1 = 0, y_2 =-1$. Using the change of coordinates matrix $\displaystyle \underset{\mathcal{E}\leftarrow\mathcal{B}}{P}$ we conclude that \[ \bigl\{ \mathbf{x} \in \mathbb{R}^3 \, : \, Q(\mathbf{x}) = -3 \bigr\} = \left\{ \left[\! \begin{array}{c} -\frac{1}{3} \\ -\frac{2}{3} \\ \frac{2}{3} \end{array} \!\right], -\left[\! \begin{array}{c} -\frac{1}{3} \\ -\frac{2}{3} \\ \frac{2}{3} \end{array} \!\right] \right\}. \] and \[ \bigl\{ \mathbf{x} \in \mathbb{R}^3 \, : \, Q(\mathbf{x}) = 3 \bigr\} = \left\{ \left[\! \begin{array}{c} -\frac{2}{3} \\ \frac{2}{3} \\ \frac{1}{3} \end{array} \!\right], - \left[\! \begin{array}{c} -\frac{2}{3} \\ \frac{2}{3} \\ \frac{1}{3} \end{array} \!\right] \right\}. \]

- Suggested problems for Section 7.2: 1, 3, 5, 7, 9, 13, 17, 19, 20, 21, 23, 25

- In Sections 7.2 and 7.3 we study quadratic forms.

-

A quadratic form in $n$ variables is a special kind of function $Q:\mathbb{R}^n \to \mathbb{R}.$ Below are few examples of quadratic forms

- Below are three specific quadratic forms in two variables: \[ Q(x_1,x_2) = 6 x_1^2 - 4 x_1 x_2 + 3 x_2^2, \qquad (x_1,x_2) \in \mathbb{R}^2 \] \[ Q(x_1,x_2) = x_1^2 + 6 x_1 x_2 + x_2^2, \qquad (x_1,x_2) \in \mathbb{R}^2 \] \[ Q(x_1,x_2) = 4 x_1^2 + 4 x_1 x_2 + x_2^2, \qquad (x_1,x_2) \in \mathbb{R}^2 \] In general, a quadratic form $Q$ in two variables $x_1,x_2$ is a function defined on $\mathbb{R}^2$ with the values in $\mathbb{R}$ which can be expressed as \[ Q(x_1,x_2) = a\, x_1x_1 + b\, x_1x_2 + c\, x_2x_2, \qquad (x_1,x_2) \in \mathbb{R}^2, \] where $a, b, c$ are real coefficients.

- Below are three specific quadratic forms in three variables: \[ Q(x_1,x_2,x_3) = x_1^2 -4x_1 x_2 +4 x_2 x_3 - x_3^2, \qquad (x_1,x_2,x_3) \in \mathbb{R}^3, \] \[ Q(x_1,x_2,x_3) = 4x_1 x_2 + 2 x_1 x_3 + 3 x_2^2 + 4 x_2 x_3, \qquad (x_1,x_2,x_3) \in \mathbb{R}^3, \] \[ Q(x_1,x_2,x_3) = 2 x_1^2 + 2 x_1 x_2 + 2 x_1 x_3 + 2 x_2^2 + 2 x_2 x_3 + 2 x_3^2, \qquad (x_1,x_2,x_3) \in \mathbb{R}^3, \] In general, a quadratic form $Q$ in three variables $x_1,x_2,x_3$ is a function defined on $\mathbb{R}^3$ with the values in $\mathbb{R}$ which can be expressed as \[ Q(x_1,x_2,x_3) = a\, x_1x_1 + b\, x_1x_2 + c\, x_1x_3 + d\, x_2 x_2 + e\, x_2 x_3 + f\, x_3 x_3, \quad (x_1,x_2,x_3) \in \mathbb{R}^3, \] where $a, b, c, d, e, f$ are real coefficients.

- A quadratic form $Q$ in four variables $x_1,x_2,x_3,x_4$ is a function defined on $\mathbb{R}^4$ with the values in $\mathbb{R}$ which is a linear combination of the following ten terms \[ x_1x_1, \quad x_1x_2, \quad x_1x_3, \quad x_1 x_4, \quad x_2 x_2, \quad x_2 x_3, \quad x_2x_4, \quad x_3 x_3, \quad x_3 x_4, \quad x_4 x_4. \] In other words, a quadratic form in four variables is a polynomial in four variables which contains only terms of degree $2.$

- In general, a quadratic form in $n$ variables is a polynomial in $n$ variables which contains only terms of degree $2.$ To be more specific, for $j, k \in \{1,\ldots,n\}$ with $j \leq k$ let us define the functions $q_{jk}:\mathbb{R}^n \to \mathbb{R}$ by \[ q_{jk}(\mathbf{x}) = x_j x_k, \qquad \mathbf{x} = (x_1,\ldots,x_n) \in \mathbb{R}^n. \] Notice that there are $\binom{n+1}{2} = \frac{n(n+1)}{2}$ such functions. A linear combination of the functions $q_{jk}(\mathbf{x})$ with $j, k \in \{1,\ldots,n\}$ with $j \leq k$, is called a quadratic form in $n$ variables.

- For us, the most important fact about quadratic forms is that for each quadratic form $Q$ in $n$ variables there exists a unique symmetric $n\!\times\!n$ matrix $A$ such that \[ Q(\mathbf{x}) = \mathbf{x} \cdot (A\mathbf{x}) = \mathbf{x}^\top A\mathbf{x} \quad \text{for all} \quad \mathbf{x} \in \mathbb{R}^n. \] Such matrix $A$ is called the matrix of a quadratic form.

- In the above example, for all $(x_1,x_2) \in \mathbb{R}^2$ we have \[ Q(x_1,x_2) = a\, x_1x_1 + b\, x_1x_2 + c\, x_2x_2 = \left[\! \begin{array}{c} x_1 \\ x_2 \end{array} \!\right]^\top \left(\left[\! \begin{array}{cc} a & b/2 \\ b/2 & c \end{array} \!\right] \left[\! \begin{array}{c} x_1 \\ x_2 \end{array} \!\right] \right) \] And for all $(x_1,x_2,x_3) \in \mathbb{R}^3$ we have \begin{align*} Q(x_1,x_2,x_3) &= a\, x_1x_1 + b\, x_1x_2 + c\, x_1x_3 + d\, x_2 x_2 + e\, x_2 x_3 + f\, x_3 x_3 \\ & = \left[\! \begin{array}{c} x_1 \\ x_2 \\ x_3 \end{array} \!\right]^\top \left(\left[\! \begin{array}{ccc} a & b/2 & c/2 \\ b/2 & d & e/2 \\ c/2 & e/2 & f \end{array} \!\right] \left[\! \begin{array}{c} x_1 \\ x_2 \\ x_3 \end{array} \!\right] \right) \end{align*}

-

In this item I will write about polychotomies in mathematics. A polychotomy is a partition of a given set of mathematical objects into disjoint classes which are all given distinct names.

-

A dichotomy is a partition of a given set of mathematical objects into two disjoint classes each of which is given a name. The following are examples of dichotomies.

- The most important dichotomy for numbers is the partition of numbers into the singleton set $\{0\}$ consisting of only zero and the set of all nonzero numbers. Further, dichotomy for the nonzero real numbers is the partition of the nonzero real numbers into positive real numbers and negative real numbers.

- An important dichotomy for the set of real numbers is the partition into rational and irrational numbers.

- A useful dichotomy for complex numbers is the partition of the complex numbers into the real and nonreal numbers. A complex number $z$ is said to be nonreal if the imaginary part of $z$ is nonzero.

- Consider the set of all square matrices. A square matrix $M$ is said to be singular if $\det M = 0.$ A square matrix $M$ is said to be nonsingular if $\det M \neq 0.$ You also learned that a square matrix is invertible if and only if it is nonsingular. Thus, singular-invertible is a dichotomy for square matrices.

-

A trichotomy is a partition of a given set of mathematical objects into three disjoint classes each of which is given a name. The following are examples of trichotomies.

- The most important trichotomy for the set of real numbers is the partition of numbers into singleton set $\{0\}$ consisting of only zero, the set of positive real numbers and the set of negative real numbers. As we mention before this trichotomy arrises as two dichotomies.

- In high school you learned about the trichotomy involving quadratic equations $a x^2 + b x + c = 0$ with $a\neq 0.$ Such equation can have: no solutions, exactly one solution, and exactly two solutions.

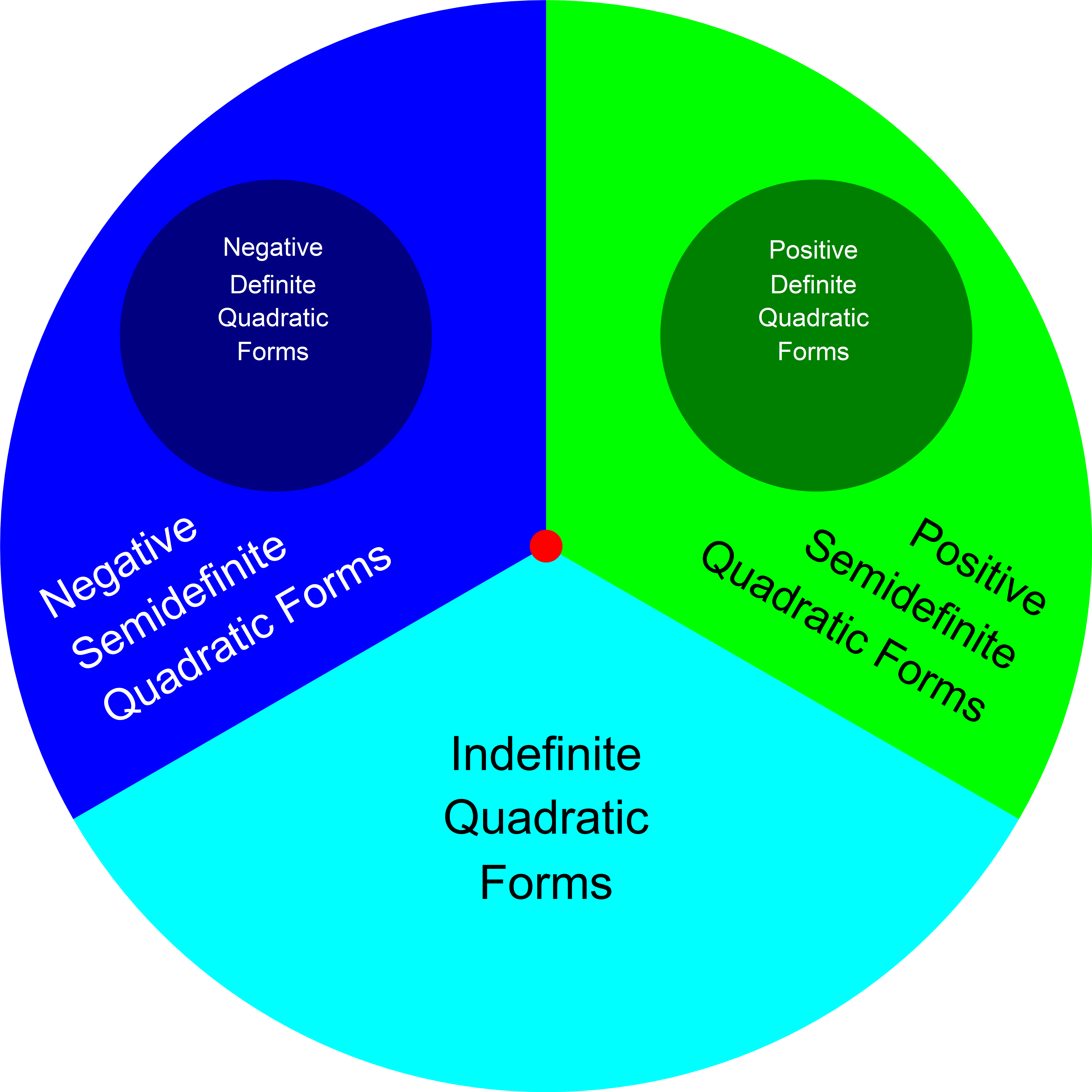

- A quadruplicity is a partition of a given set of mathematical objects into four disjoint classes each of which is given a name. I started writing about polychotomies because of the quadruplicity which arises with quadratic forms. I define that quadruplicity in the next item.

-

A dichotomy is a partition of a given set of mathematical objects into two disjoint classes each of which is given a name. The following are examples of dichotomies.

-

Let $Q : \mathbb R^n \to \mathbb R$ be a quadratic form. We distinguish the following four types of quadratic forms: